Premium Only Content

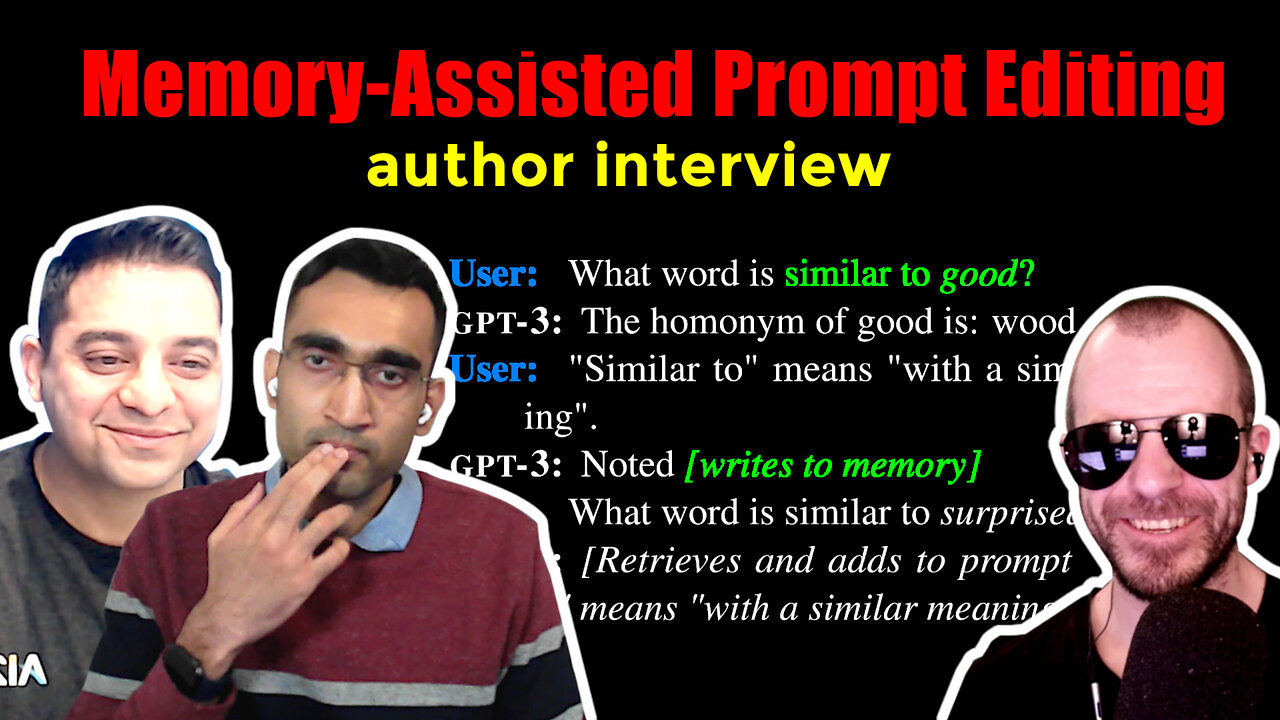

Author Interview - Memory-assisted prompt editing to improve GPT-3 after deployment

#nlp #gpt3 #prompt

This is an interview with the authors of this work, Aman Madaan and Niket Tandon.

Large language models such as GPT-3 have enabled many breakthroughs and new applications recently, but they come with an important downside: Training them is very expensive, and even fine-tuning is often difficult. This paper presents an adaptive method to improve performance of such models after deployment, without ever changing the model itself. This is done by maintaining a memory of interactions and then dynamically adapting new prompts by augmenting them with memory content. This has many applications, from non-intrusive fine-tuning to personalization.

OUTLINE:

0:00 - Intro

0:45 - Paper Overview

2:00 - What was your original motivation?

4:20 - There is an updated version of the paper!

9:00 - Have you studied this on real-world users?

12:10 - How does model size play into providing feedback?

14:10 - Can this be used for personalization?

16:30 - Discussing experimental results

17:45 - Can this be paired with recommender systems?

20:00 - What are obvious next steps to make the system more powerful?

23:15 - Clarifying the baseline methods

26:30 - Exploring cross-lingual customization

31:00 - Where did the idea for the clarification prompt come from?

33:05 - What did not work out during this project?

34:45 - What did you learn about interacting with large models?

37:30 - Final thoughts

Paper: https://arxiv.org/abs/2201.06009

Code & Data: https://github.com/madaan/memprompt

Abstract:

Large LMs such as GPT-3 are powerful, but can commit mistakes that are obvious to humans. For example, GPT-3 would mistakenly interpret "What word is similar to good?" to mean a homonym, while the user intended a synonym. Our goal is to effectively correct such errors via user interactions with the system but without retraining, which will be prohibitively costly. We pair GPT-3 with a growing memory of recorded cases where the model misunderstood the user's intents, along with user feedback for clarification. Such a memory allows our system to produce enhanced prompts for any new query based on the user feedback for error correction on similar cases in the past. On four tasks (two lexical tasks, two advanced ethical reasoning tasks), we show how a (simulated) user can interactively teach a deployed GPT-3, substantially increasing its accuracy over the queries with different kinds of misunderstandings by the GPT-3. Our approach is a step towards the low-cost utility enhancement for very large pre-trained LMs. All the code and data is available at this https URL.

Authors: Aman Madaan, Niket Tandon, Peter Clark, Yiming Yang

Links:

Merch: store.ykilcher.com

TabNine Code Completion (Referral): http://bit.ly/tabnine-yannick

YouTube: https://www.youtube.com/c/yannickilcher

Twitter: https://twitter.com/ykilcher

Discord: https://discord.gg/4H8xxDF

BitChute: https://www.bitchute.com/channel/yann...

LinkedIn: https://www.linkedin.com/in/ykilcher

BiliBili: https://space.bilibili.com/2017636191

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

SubscribeStar: https://www.subscribestar.com/yannick...

Patreon: https://www.patreon.com/yannickilcher

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n

-

1:22:33

1:22:33

Dialogue works

1 day ago $14.05 earnedScott Ritter: Putin Warns Europe: “We’re Ready Right Now”

88.4K68 -

13:14

13:14

itsSeanDaniel

2 days agoIlhan Omar EXPOSED for LYING about Somalian Fraud

22.7K39 -

1:52:46

1:52:46

Side Scrollers Podcast

23 hours agoNintendo Fans Are PISSED at Craig + Netflix BUYS Warner Bros + VTube DRAMA + More | Side Scrollers

105K6 -

18:43

18:43

Nikko Ortiz

17 hours agoWorst Karen Internet Clips...

21.3K6 -

11:23

11:23

MattMorseTV

18 hours ago $16.77 earnedTrump just RAMPED IT UP.

37.7K65 -

46:36

46:36

MetatronCore

2 days agoHasan Piker at Trigernometry

19.8K7 -

29:01

29:01

The Pascal Show

20 hours ago $6.48 earnedRUNNING SCARED! Candace Owens DESTROYS TPUSA! Are They Backing Out?!

29.2K24 -

6:08:30

6:08:30

Dr Disrespect

22 hours ago🔴LIVE - DR DISRESPECT - ARC RAIDERS - FREE LOADOUT EXPERT

77K7 -

2:28:08

2:28:08

PandaSub2000

1 day agoMyst (Part 1) | MIDNIGHT ADVENTURE CLUB (Edited Replay)

45.8K -

21:57

21:57

GritsGG

1 day agoBO7 Warzone Patch Notes! My Thoughts! (Most Wins in 13,000+)

48.5K