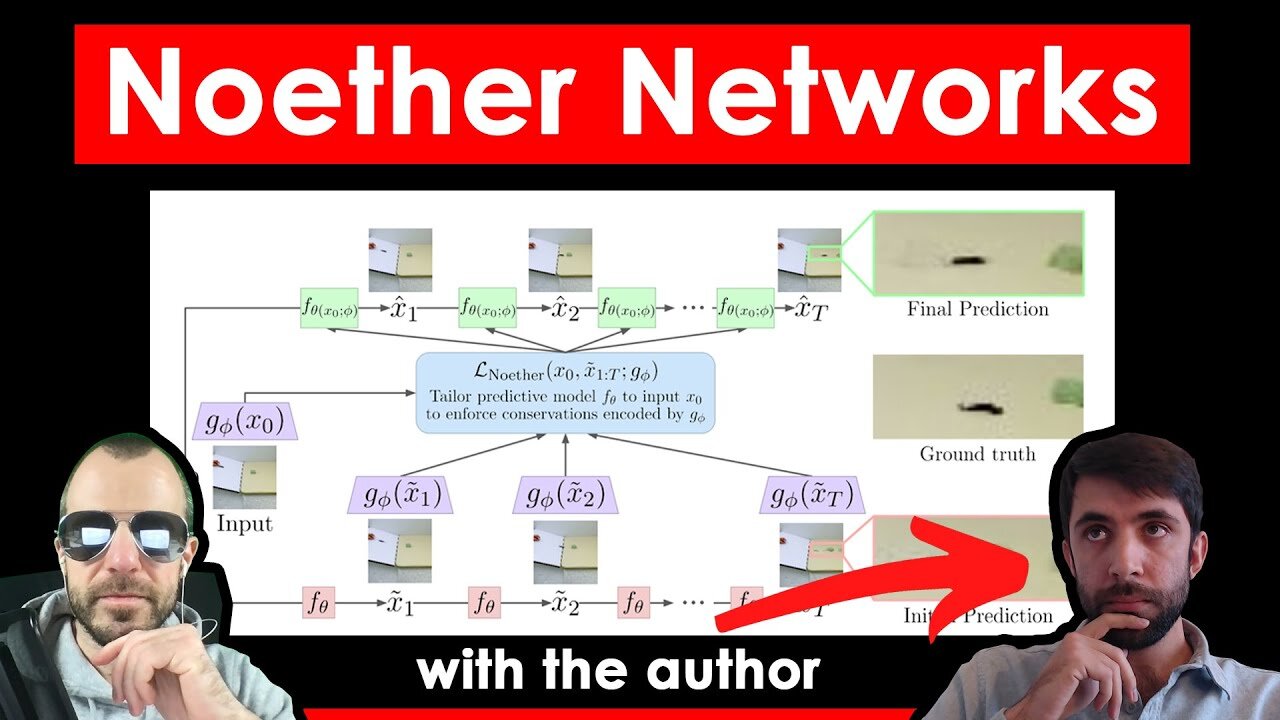

Noether Networks: Meta-Learning Useful Conserved Quantities (w/ the authors)

#deeplearning #noether #symmetries

This video includes an interview with first author Ferran Alet!

Encoding inductive biases has long been an established method for enabling deep networks to learn from less data. Especially useful are encodings of symmetry properties of the data, such as the translation equivariance of convolutions. But such symmetries are often hard to program explicitly, and they can only be encoded exactly when this is done in a direct fashion. Noether Networks use Noether's theorem, which connects symmetries to conserved quantities, and are able to dynamically and approximately enforce symmetry properties on deep neural networks.

OUTLINE:

0:00 - Intro & Overview

18:10 - Interview Start

21:20 - Symmetry priors vs conserved quantities

23:25 - Example: Pendulum

27:45 - Noether Network Model Overview

35:35 - Optimizing the Noether Loss

41:00 - Is the computation graph stable?

46:30 - Increasing the inference time computation

48:45 - Why dynamically modify the model?

55:30 - Experimental Results & Discussion

Paper: https://arxiv.org/abs/2112.03321

Website: https://dylandoblar.github.io/noether...

Code: https://github.com/dylandoblar/noethe...

Abstract:

Progress in machine learning (ML) stems from a combination of data availability, computational resources, and an appropriate encoding of inductive biases. Useful biases often exploit symmetries in the prediction problem, such as convolutional networks relying on translation equivariance. Automatically discovering these useful symmetries holds the potential to greatly improve the performance of ML systems, but still remains a challenge. In this work, we focus on sequential prediction problems and take inspiration from Noether's theorem to reduce the problem of finding inductive biases to meta-learning useful conserved quantities. We propose Noether Networks: a new type of architecture where a meta-learned conservation loss is optimized inside the prediction function. We show, theoretically and experimentally, that Noether Networks improve prediction quality, providing a general framework for discovering inductive biases in sequential problems.
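
To make the idea of optimizing a conservation loss inside the prediction function more concrete, here is a minimal sketch of the inner loop only. It is not the authors' implementation. Several things are assumptions for illustration: the predictor is a hypothetical linear map `W`, the conserved quantity is hand-picked as the squared norm (in the paper it is meta-learned), and the step size and step count are arbitrary. The outer meta-learning loop that trains the conserved quantity is omitted.

```python
import numpy as np


def conserved_quantity(x):
    # Assumption: a hand-picked stand-in for the meta-learned quantity g.
    # The squared norm is conserved under pure rotations.
    return float(x @ x)


def noether_loss(W, x0):
    # Squared change of the conserved quantity between input and prediction.
    x1 = W @ x0
    return (conserved_quantity(x1) - conserved_quantity(x0)) ** 2


def noether_loss_grad(W, x0):
    # Analytic gradient of noether_loss with respect to W.
    x1 = W @ x0
    residual = conserved_quantity(x1) - conserved_quantity(x0)
    return 4.0 * residual * np.outer(x1, x0)


def tailored_predict(W, x0, lr=0.05, steps=20):
    # Adapt a copy of the predictor's weights to this specific input by
    # gradient descent on the conservation loss, then predict with it.
    W_tailored = W.copy()
    for _ in range(steps):
        W_tailored -= lr * noether_loss_grad(W_tailored, x0)
    return W_tailored @ x0, W_tailored


# Hypothetical imperfect predictor: a rotation that also inflates the norm.
angle = 0.3
rotation = np.array([[np.cos(angle), -np.sin(angle)],
                     [np.sin(angle), np.cos(angle)]])
W = 1.1 * rotation
x0 = np.array([1.0, 0.0])

plain = W @ x0
tailored, W_tailored = tailored_predict(W, x0)

print("g(x0)       =", conserved_quantity(x0))
print("g(plain)    =", conserved_quantity(plain))
print("g(tailored) =", conserved_quantity(tailored))
```

In this toy setup the untailored prediction drifts in squared norm, while the tailored prediction is pulled back toward the value of the input. The weights are adapted per input at inference time, which is why the approach increases inference-time computation.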

Authors: Ferran Alet, Dylan Doblar, Allan Zhou, Joshua Tenenbaum, Kenji Kawaguchi, Chelsea Finn

Links:

TabNine Code Completion (Referral): http://bit.ly/tabnine-yannick

YouTube: https://www.youtube.com/c/yannickilcher

Twitter: https://twitter.com/ykilcher

Discord: https://discord.gg/4H8xxDF

BitChute: https://www.bitchute.com/channel/yann...

LinkedIn: https://www.linkedin.com/in/ykilcher

BiliBili: https://space.bilibili.com/2017636191

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

SubscribeStar: https://www.subscribestar.com/yannick...

Patreon: https://www.patreon.com/yannickilcher

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n
