Premium Only Content

This video is only available to Rumble Premium subscribers. Subscribe to

enjoy exclusive content and ad-free viewing.

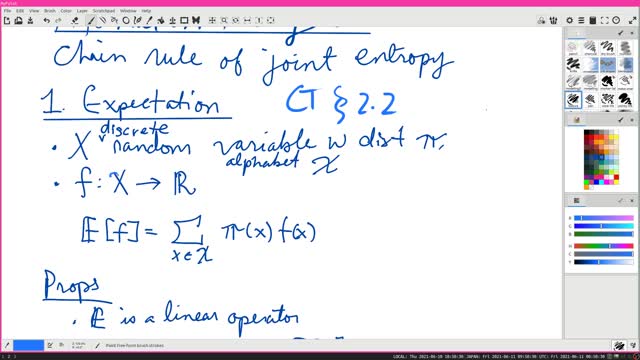

Chain Rule of Joint Entropy | Information Theory 5 | Cover-Thomas Section 2.2

4 years ago

H(X, Y) = H(X) + H(Y | X). In other words, the joint entropy (= uncertainty) of two variables is the entropy of one plus the conditional entropy of the other given the first. In particular, if X and Y are independent, then H(Y | X) = H(Y), so the uncertainty of the pair is the sum of the two individual uncertainties: H(X, Y) = H(X) + H(Y).
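The chain rule can be checked numerically on a small example. The sketch below is only an illustration, not taken from the video: the joint distribution `joint` and the helper names (`entropy`, `marginal_x`, `conditional_entropy_y_given_x`) are hypothetical and chosen for this example. It computes H(X, Y), H(X), and H(Y | X) in bits, so the two sides of the identity can be compared.

```python
from math import log2


def entropy(probs):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * log2(p) for p in probs if p > 0)


def marginal_x(joint):
    """Marginal p(x), obtained by summing the joint p(x, y) over y."""
    px = {}
    for (x, _), p in joint.items():
        px[x] = px.get(x, 0.0) + p
    return px


def conditional_entropy_y_given_x(joint):
    """H(Y | X) = sum over x of p(x) * H(Y | X = x)."""
    total = 0.0
    for x, p_x in marginal_x(joint).items():
        if p_x == 0:
            continue
        # Conditional distribution p(y | x) for this fixed x.
        cond = [p / p_x for (xx, _), p in joint.items() if xx == x]
        total += p_x * entropy(cond)
    return total


# Hypothetical joint distribution p(x, y) with X, Y in {0, 1} (assumed for illustration).
joint = {(0, 0): 0.5, (0, 1): 0.25, (1, 0): 0.125, (1, 1): 0.125}

h_xy = entropy(joint.values())
h_x = entropy(marginal_x(joint).values())
h_y_given_x = conditional_entropy_y_given_x(joint)

# Independent case: build p(x, y) = p(x) * p(y) from two assumed marginals.
p_x = {0: 0.75, 1: 0.25}
p_y = {0: 0.5, 1: 0.5}
independent = {(x, y): p_x[x] * p_y[y] for x in p_x for y in p_y}
h_indep = entropy(independent.values())
h_sum = entropy(p_x.values()) + entropy(p_y.values())

if __name__ == "__main__":
    print(f"H(X,Y) = {h_xy:.6f}, H(X) + H(Y|X) = {h_x + h_y_given_x:.6f}")
    print(f"independent: H(X,Y) = {h_indep:.6f}, H(X) + H(Y) = {h_sum:.6f}")
```

In both cases the two printed values should agree up to floating-point rounding, which is what the chain rule predicts.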

#InformationTheory #CoverThomas

Loading comments...

-

33:03

33:03

Dr. Ajay Kumar PHD (he/him)

3 years agoAll triangles with equal base and equal area have equal height | Euclid's Elements Book 1 Prop 39

34 -

57:16

57:16

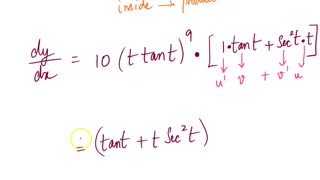

Calculus Lectures

4 years agoMath4A Lecture Overview MAlbert CH3 | 6 Chain Rule

80 -

2:40

2:40

KGTV

4 years agoPatient information compromised

341 -

25:38

25:38

The Rubin Report

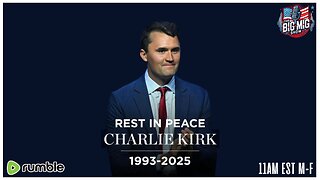

3 hours agoRemembering Charlie Kirk & 9/11

209K24 -

1:00:32

1:00:32

VINCE

4 hours agoRest In Peace Charlie Kirk | Episode 123 - 09/11/25

324K304 -

LIVE

LIVE

LFA TV

7 hours agoLFA TV ALL DAY STREAM - THURSDAY 9/11/25

4,829 watching -

LIVE

LIVE

Bannons War Room

6 months agoWarRoom Live

7,995 watching -

25:21

25:21

The Shannon Joy Show

2 hours agoA message of encouragement and a call for faith and unity after the tragic killing of Charlie Kirk

65.3K13 -

1:31:37

1:31:37

The Big Mig™

3 hours agoIn Honor Of Charlie Kirk, Rest In Peace 🙏🏻

54K14 -

1:36:35

1:36:35

The White House

5 hours agoPresident Trump and the First Lady Attend a September 11th Observance Event

108K43