Premium Only Content

This video is only available to Rumble Premium subscribers. Subscribe to

enjoy exclusive content and ad-free viewing.

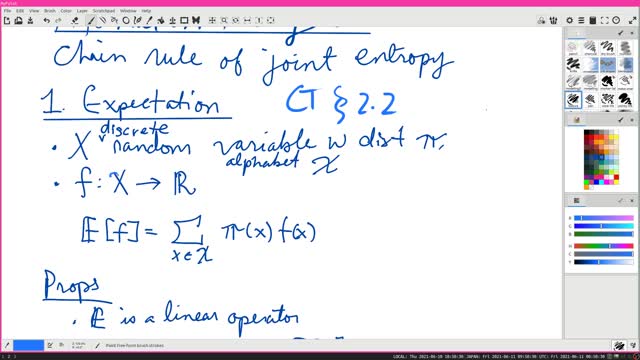

Chain Rule of Joint Entropy | Information Theory 5 | Cover-Thomas Section 2.2

4 years ago


H(X, Y) = H(X) + H(Y | X). In other words, the entropy (i.e., uncertainty) of a pair of variables is the entropy of one variable plus the conditional entropy of the other given the first. In particular, if the variables are independent, then H(Y | X) = H(Y), so the uncertainty of the pair is the sum of the two individual uncertainties.
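As a minimal sketch, the chain rule can be checked numerically in Python. The joint distributions below are hypothetical, chosen only for illustration and not taken from the video or from Cover-Thomas, and the helper names (entropy, marginal, conditional_entropy_y_given_x) are illustrative. The code computes H(X, Y) directly from p(x, y), computes H(X) from the marginal, and computes H(Y | X) from its definition as the average over x of H(Y | X = x).

```python
from math import isclose, log2

def entropy(probs):
    """Shannon entropy in bits; zero-probability terms contribute 0."""
    return -sum(p * log2(p) for p in probs if p > 0)

def marginal(joint, axis):
    """Marginal distribution of coordinate `axis` (0 for X, 1 for Y)."""
    out = {}
    for pair, p in joint.items():
        out[pair[axis]] = out.get(pair[axis], 0.0) + p
    return out

def conditional_entropy_y_given_x(joint):
    """H(Y|X) = sum over x of p(x) * H(Y | X = x), straight from the definition."""
    p_x = marginal(joint, 0)
    total = 0.0
    for x, px in p_x.items():
        if px == 0:
            continue
        # Conditional distribution p(y | x) = p(x, y) / p(x)
        cond = [p / px for (xx, _), p in joint.items() if xx == x]
        total += px * entropy(cond)
    return total

# Hypothetical joint distribution p(x, y) over X, Y in {0, 1} (dependent variables)
joint = {(0, 0): 0.5, (0, 1): 0.25, (1, 0): 0.125, (1, 1): 0.125}

h_xy = entropy(joint.values())
h_x = entropy(marginal(joint, 0).values())
h_y_given_x = conditional_entropy_y_given_x(joint)
print(f"H(X,Y) = {h_xy:.4f}, H(X) + H(Y|X) = {h_x + h_y_given_x:.4f}")

# Hypothetical independent case: p(x, y) = p(x) p(y), so H(Y|X) = H(Y)
p_x = {0: 0.5, 1: 0.5}
p_y = {0: 0.25, 1: 0.75}
indep = {(x, y): p_x[x] * p_y[y] for x in p_x for y in p_y}
h_indep = entropy(indep.values())
h_sum = entropy(p_x.values()) + entropy(p_y.values())
print(f"independent: H(X,Y) = {h_indep:.4f}, H(X) + H(Y) = {h_sum:.4f}")
```

For the dependent example both sides come out to 1.75 bits; for the independent example H(X, Y) matches H(X) + H(Y). Computing H(Y | X) from the conditional distributions, rather than as H(X, Y) - H(X), keeps the check from being circular.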

#InformationTheory #CoverThomas
