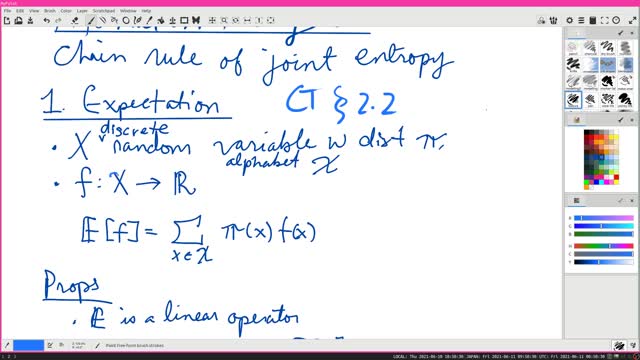

Chain Rule of Joint Entropy | Information Theory 5 | Cover-Thomas Section 2.2

4 years ago

16

H(X, Y) = H(X) + H(Y | X). In other words, the joint entropy (uncertainty) of two variables is the entropy of one plus the conditional entropy of the other given the first. In particular, if the variables are independent, then H(Y | X) = H(Y), so the uncertainty of two independent variables is the sum of their individual uncertainties.
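The identity follows from factoring the joint pmf as p(x, y) = p(x) p(y | x) inside the logarithm: H(X, Y) = -Σ p(x, y) log p(x, y) = -Σ p(x, y) log p(x) - Σ p(x, y) log p(y | x) = H(X) + H(Y | X). The Python sketch below checks this numerically. The helper names `entropy` and `chain_rule_terms` and the example distributions are hypothetical, chosen only for illustration, and entropies are measured in bits (log base 2).

```python
import math

def entropy(probs):
    """Shannon entropy in bits of an iterable of probabilities (zero terms skipped)."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def chain_rule_terms(joint):
    """Given a joint pmf as {(x, y): p}, return (H(X,Y), H(X), H(Y|X)) in bits."""
    # Marginal p(x) = sum over y of p(x, y)
    px = {}
    for (x, _y), p in joint.items():
        px[x] = px.get(x, 0.0) + p
    h_xy = entropy(joint.values())
    h_x = entropy(px.values())
    # H(Y|X) = -sum p(x, y) log2 p(y|x), where p(y|x) = p(x, y) / p(x)
    h_y_given_x = -sum(
        p * math.log2(p / px[x]) for (x, _y), p in joint.items() if p > 0
    )
    return h_xy, h_x, h_y_given_x

# Hypothetical dependent joint distribution
joint = {(0, 0): 0.5, (0, 1): 0.25, (1, 0): 0.125, (1, 1): 0.125}
h_xy, h_x, h_y_given_x = chain_rule_terms(joint)
print(round(h_xy, 4), round(h_x + h_y_given_x, 4))  # → 1.75 1.75

# Independent case: p(x, y) = p(x) p(y), so H(Y|X) = H(Y) and H(X,Y) = H(X) + H(Y)
p_x = {0: 0.5, 1: 0.5}
p_y = {0: 0.25, 1: 0.75}
indep = {(x, y): p_x[x] * p_y[y] for x in p_x for y in p_y}
h_xy_ind, _, _ = chain_rule_terms(indep)
print(math.isclose(h_xy_ind, entropy(p_x.values()) + entropy(p_y.values())))  # → True
```

For the dependent example, H(X) ≈ 0.8113 bits and H(Y | X) ≈ 0.9387 bits, which add up to the joint entropy of 1.75 bits.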

#InformationTheory #CoverThomas

Loading comments...

-

12:26

12:26

Dr. Ajay Kumar PHD (he/him)

3 years agoBitcoin is Obvious | Euclid's Elements Book 1 Prop 41

13 -

57:16

57:16

Calculus Lectures

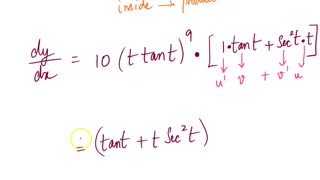

4 years agoMath4A Lecture Overview MAlbert CH3 | 6 Chain Rule

81 -

2:40

2:40

KGTV

4 years agoPatient information compromised

341 -

1:31:56

1:31:56

Michael Franzese

19 hours agoWill NBA do anything about their Gambling Problems?

119K26 -

57:26

57:26

X22 Report

9 hours agoMr & Mrs X - The Food Industry Is Trying To Pull A Fast One On RFK Jr (MAHA), This Will Fail - EP 14

93K60 -

2:01:08

2:01:08

LFA TV

1 day agoTHE RUMBLE RUNDOWN LIVE @9AM EST

149K12 -

1:28:14

1:28:14

On Call with Dr. Mary Talley Bowden

7 hours agoI came for my wife.

27.9K32 -

1:06:36

1:06:36

Wendy Bell Radio

12 hours agoPet Talk With The Pet Doc

66.6K32 -

30:58

30:58

SouthernbelleReacts

3 days ago $9.08 earnedWe Didn’t Expect That Ending… ‘Welcome to Derry’ S1 E1 Reaction

45.5K10 -

13:51

13:51

True Crime | Unsolved Cases | Mysterious Stories

5 days ago $20.15 earned7 Real Life Heroes Caught on Camera (Remastered Audio)

61.5K17