Premium Only Content

This video is only available to Rumble Premium subscribers. Subscribe to

enjoy exclusive content and ad-free viewing.

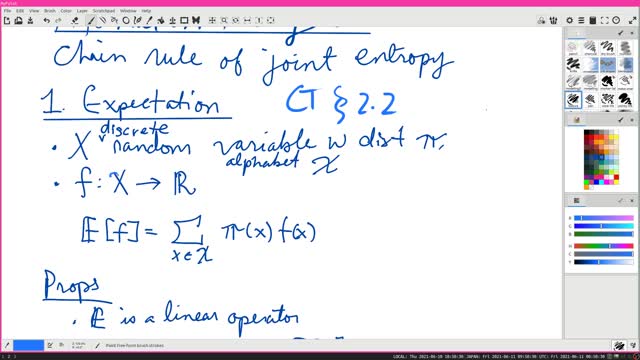

Chain Rule of Joint Entropy | Information Theory 5 | Cover-Thomas Section 2.2

4 years ago

H(X, Y) = H(X) + H(Y | X). In other words, the entropy (= uncertainty) of a pair of variables equals the entropy of one of them plus the conditional entropy of the other given the first. In particular, if X and Y are independent, then H(Y | X) = H(Y), so the uncertainty of two independent variables is the sum of their individual uncertainties.
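As a quick numerical check of the chain rule, here is a minimal Python sketch that computes both sides for a small joint distribution. The helper names (`entropy`, `marginal`, `conditional_entropy`) and both example distributions are hypothetical and are not taken from the video. Entropies are measured in bits (log base 2).

```python
import math
from collections import defaultdict


def entropy(probs):
    """Shannon entropy in bits; zero-probability terms contribute nothing (0 log 0 = 0)."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def marginal(pxy, axis):
    """Marginal pmf of X (axis=0) or Y (axis=1) from a joint pmf given as {(x, y): p}."""
    m = defaultdict(float)
    for pair, p in pxy.items():
        m[pair[axis]] += p
    return dict(m)


def conditional_entropy(pxy):
    """H(Y | X) = sum over x of p(x) * H(Y | X = x)."""
    px = marginal(pxy, 0)
    total = 0.0
    for x, p_x in px.items():
        if p_x == 0:
            continue
        # Conditional pmf of Y given X = x.
        row = [p / p_x for (xi, _), p in pxy.items() if xi == x]
        total += p_x * entropy(row)
    return total


# Hypothetical joint distribution of two binary variables (X, Y).
pxy = {(0, 0): 0.5, (0, 1): 0.25, (1, 0): 0.0, (1, 1): 0.25}
h_xy = entropy(pxy.values())
h_x = entropy(marginal(pxy, 0).values())
h_y_given_x = conditional_entropy(pxy)
print(f"H(X,Y) = {h_xy:.4f}, H(X) + H(Y|X) = {h_x + h_y_given_x:.4f}")

# Independent case: p(x, y) = p(x) p(y), so H(Y|X) = H(Y) and H(X,Y) = H(X) + H(Y).
px, py = {0: 0.5, 1: 0.5}, {0: 0.25, 1: 0.75}
indep = {(x, y): px[x] * py[y] for x in px for y in py}
h_sum = entropy(px.values()) + entropy(py.values())
print(f"H(X,Y) = {entropy(indep.values()):.4f}, H(X) + H(Y) = {h_sum:.4f}")
```

Both lines print matching values: 1.5000 for the dependent example and 1.8113 for the independent one. In the first example, H(X) is about 0.8113 and H(Y | X) is about 0.6887. Their sum reproduces H(X, Y) = 1.5 bits.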

#InformationTheory #CoverThomas

Loading comments...

-

11:24

11:24

Dr. Ajay Kumar PHD (he/him)

3 years agoAll triangles with the same base and height have equal area | Euclid's Elements Book 1 Prop 38

13 -

57:16

57:16

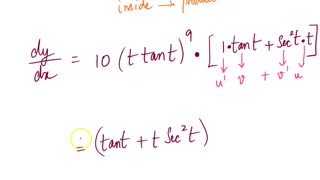

Calculus Lectures

4 years agoMath4A Lecture Overview MAlbert CH3 | 6 Chain Rule

81 -

2:40

2:40

KGTV

4 years agoPatient information compromised

341 -

48:57

48:57

Man in America

17 hours agoThe Sinister Reason They Put Fluoride in Everything w/ Larry Oberheu

350K89 -

1:06:56

1:06:56

Sarah Westall

14 hours agoAstrological Predictions, Epstein & Charlie Kirk w/ Kim Iversen

91.6K64 -

2:06:49

2:06:49

vivafrei

23 hours agoEp. 289: Arctic Frost, Boasberg Impeachment, SNAP Funding, Trump - China, Tylenol Sued & MORE!

275K202 -

2:56:28

2:56:28

IsaiahLCarter

18 hours ago $11.30 earnedThe Tri-State Commission, Election Weekend Edition || APOSTATE RADIO 033 (Guest: Adam B. Coleman)

54.6K7 -

15:03

15:03

Demons Row

13 hours ago $13.21 earnedThings Real 1%ers Never Do! 💀🏍️

68.9K22 -

35:27

35:27

megimu32

17 hours agoMEGI + PEPPY LIVE FROM DREAMHACK!

190K15 -

1:03:23

1:03:23

Tactical Advisor

20 hours agoNew Gun Unboxing | Vault Room Live Stream 044

272K41