Premium Only Content

This video is only available to Rumble Premium subscribers. Subscribe to

enjoy exclusive content and ad-free viewing.

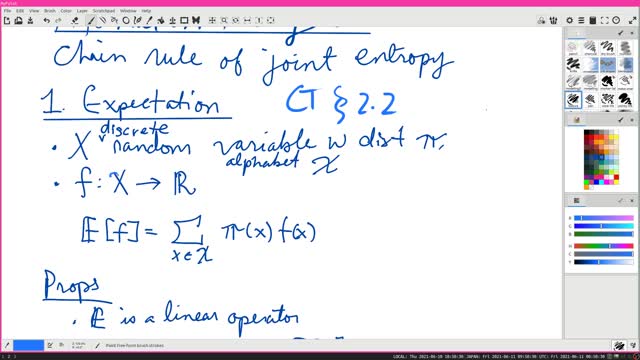

Chain Rule of Joint Entropy | Information Theory 5 | Cover-Thomas Section 2.2

4 years ago


H(X, Y) = H(X) + H(Y | X). In other words, the joint entropy (= uncertainty) of two random variables is the entropy of one plus the conditional entropy of the other given the first. In particular, if X and Y are independent, then H(Y | X) = H(Y), so H(X, Y) = H(X) + H(Y): the joint uncertainty is the sum of the two individual uncertainties.
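As a quick numerical sanity check of the chain rule, here is a minimal Python sketch. The joint distribution `joint`, the marginals `px_ind` and `py_ind`, and the helper names `entropy` and `chain_rule_terms` are all made up for illustration and do not come from the video or the textbook. The conditional entropy is computed directly from its definition, H(Y | X) = sum over x of p(x) H(Y | X = x), so the comparison is not circular.

```python
import math


def entropy(probs):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def chain_rule_terms(joint):
    """Return (H(X,Y), H(X), H(Y|X)) for a joint pmf given as {(x, y): p}."""
    # Marginal p(x), obtained by summing the joint pmf over y.
    px = {}
    for (x, _), p in joint.items():
        px[x] = px.get(x, 0.0) + p

    h_xy = entropy(joint.values())
    h_x = entropy(px.values())

    # H(Y|X) = sum_x p(x) * H(Y | X = x), straight from the definition.
    h_y_given_x = 0.0
    for x, p_x in px.items():
        if p_x == 0:
            continue
        conditional = [p / p_x for (xi, _), p in joint.items() if xi == x]
        h_y_given_x += p_x * entropy(conditional)

    return h_xy, h_x, h_y_given_x


# Hypothetical dependent joint distribution p(x, y), for illustration only.
joint = {(0, 0): 0.5, (0, 1): 0.25, (1, 0): 0.125, (1, 1): 0.125}
h_xy, h_x, h_y_given_x = chain_rule_terms(joint)
print("H(X,Y)         =", h_xy)
print("H(X) + H(Y|X)  =", h_x + h_y_given_x)

# Hypothetical independent case: p(x, y) = p(x) * p(y).
px_ind = {0: 0.75, 1: 0.25}
py_ind = {0: 0.5, 1: 0.5}
joint_ind = {(x, y): px_ind[x] * py_ind[y] for x in px_ind for y in py_ind}
h_xy_ind, h_x_ind, _ = chain_rule_terms(joint_ind)
h_y_ind = entropy(py_ind.values())
print("independent: H(X,Y) =", h_xy_ind, " H(X) + H(Y) =", h_x_ind + h_y_ind)
```

In both cases the two printed quantities should agree up to floating-point rounding. In the independent case the conditional term reduces to H(Y), which is the additivity statement above.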

#InformationTheory #CoverThomas

Loading comments...

-

33:03

33:03

Dr. Ajay Kumar PHD (he/him)

3 years ago $0.01 earnedAll triangles with equal base and equal area have equal height | Euclid's Elements Book 1 Prop 39

37 -

57:16

57:16

Calculus Lectures

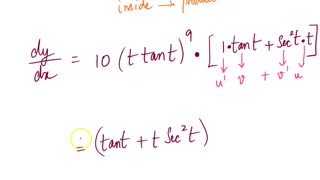

5 years agoMath4A Lecture Overview MAlbert CH3 | 6 Chain Rule

82 -

2:40

2:40

KGTV

4 years agoPatient information compromised

361 -

DVR

DVR

The Rubin Report

1 hour agoHost Gets Visibly Angry as Scott Bessent Rips Him to Shreds in Front of NY Times Crowd

6.14K7 -

LIVE

LIVE

iCkEdMeL

1 hour ago🔴 BREAKING: Brian Cole Identified as DC Pipe Bomb Suspect — FBI Arrest

284 watching -

LIVE

LIVE

LFA TV

14 hours agoLIVE & BREAKING NEWS! | THURSDAY 12/04/25

4,007 watching -

59:25

59:25

VINCE

3 hours agoFINALLY: Jan 6th Pipe Bomber Arrested? | Episode 181 - 12/04/25 VINCE

161K119 -

DVR

DVR

Chad Prather

2 hours agoCandace Owens ACCEPTS TPUSA’s Debate Invitation + Dem Rep Instructs Military To REMOVE Trump?!

15.1K11 -

LIVE

LIVE

Grant Stinchfield

1 hour agoBiden’s Inner Circle Cracks! It's the Presidency That Wasn’t!

118 watching -

1:32:47

1:32:47

Graham Allen

4 hours agoCandace Owens vs TPUSA! It’s Time To End This Before It Destroys Everything Charlie Fought For!

126K548