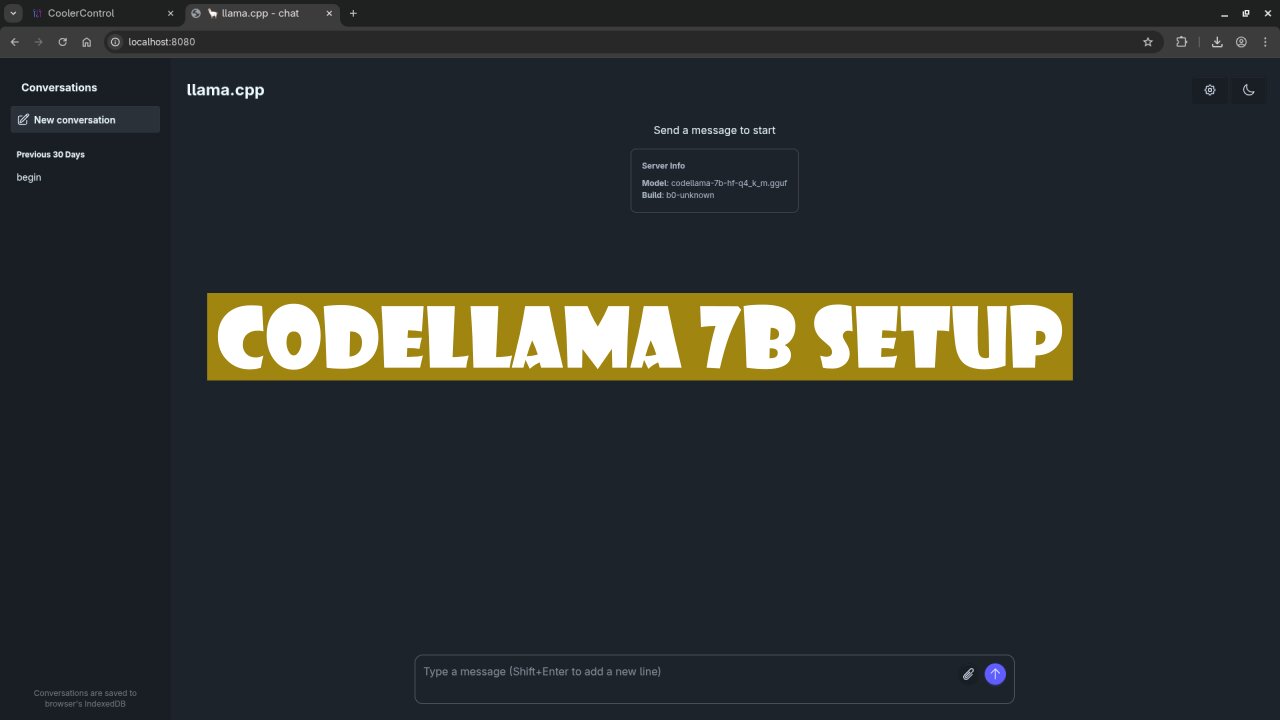

How to Set Up Codellama 7B with Llama.cpp WebUI on Linux | Complete AMD Instinct MI60 Setup Guide!

Configuring Codellama 7B WebUI with Llama.cpp on Linux (AMD Instinct MI60 GPU Setup)

Welcome to this step-by-step tutorial on configuring Codellama 7B with the Llama.cpp WebUI on a Linux system powered by the AMD Instinct MI60 32GB HBM2 GPU. In this video, I walk through the full setup with easy-to-follow instructions, suitable for beginners and for anyone looking to optimize an existing generative AI setup.

📌 What you will learn:

Installing Llama.cpp on Fedora Linux

Setting up Codellama 7B with ROCm support for AMD GPUs

Verifying hardware and software configurations

Running the Codellama WebUI to interact with the model locally
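
For readers who want to script the last two steps, here is a minimal Python sketch, not the exact method shown in the video. It assumes the ROCm `rocminfo` utility and the Llama.cpp `llama-server` binary are on your PATH, and that the server is listening on its default address of `127.0.0.1:8080`; your binary names, paths, and port may differ depending on how you built and launched Llama.cpp. The helper names (`find_tools`, `build_chat_request`, `ask_local_model`) are made up for illustration.

```python
import json
import shutil
import urllib.request

# Assumption: llama-server's default address; change it if you set --host/--port.
SERVER_URL = "http://127.0.0.1:8080"


def find_tools(names=("rocminfo", "llama-server")):
    """Map each tool name to its location on PATH, or None if it is missing."""
    return {name: shutil.which(name) for name in names}


def build_chat_request(prompt, max_tokens=256):
    """Build the JSON body for the server's OpenAI-compatible chat endpoint."""
    return {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def ask_local_model(prompt, base_url=SERVER_URL, timeout=120):
    """POST a prompt to an already running llama-server and return the reply text."""
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        base_url + "/v1/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]
```

With the server already running, `find_tools()` shows which of the two tools were found, and `ask_local_model("Write a Python function that reverses a string.")` sends one prompt to the same local server that hosts the WebUI.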

If you're looking to dive into AI models or need help configuring your own Codellama 7B setup, this screencast will be your complete guide!

💡 Want more? Check out the full blog post for details on the installation and setup process:

https://ojambo.com/review-generative-ai-codellama-7b-hf-q4_k_m-gguf-model

🔧 Need help with installation or migration? I offer personalized services:

One-on-one Python tutorials: https://ojambo.com/contact

Codellama installation and migration services: https://ojamboservices.com/contact

#Codellama7B #LlamaCpp #AMDInstinct #MachineLearning #AIModels #WebUI #LinuxTutorial #GenerativeAI #Fedora #AMDROCm #PythonTutorial #AI #DeepLearning #Python #OpenSourceAI
