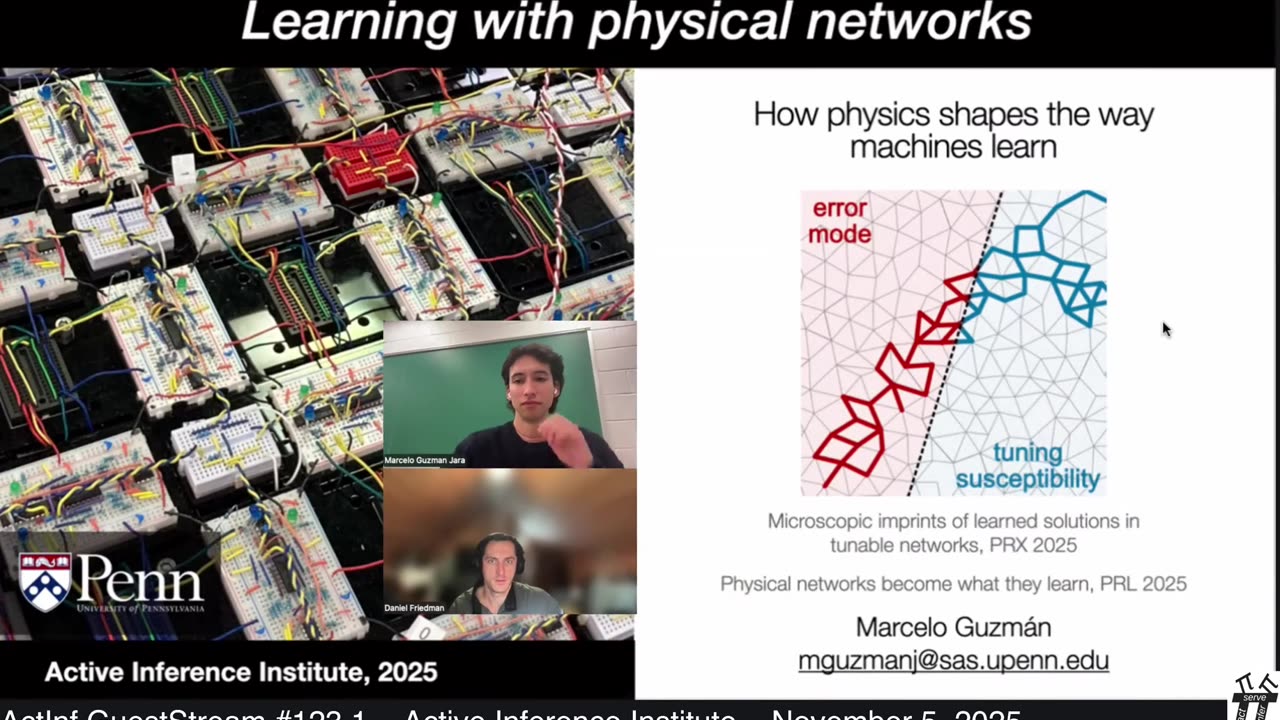

ActInf GuestStream 123.1 ~ Marcelo Guzman: "Learning in Physical Systems"

Physical networks become what they learn

Menachem Stern, Marcelo Guzman, Felipe Martins, Andrea J Liu, Vijay Balasubramanian

https://arxiv.org/abs/2406.09689

Physical networks can develop diverse responses, or functions, by design, evolution or learning. We focus on electrical networks of nodes connected by resistive edges. Such networks can learn by adapting edge conductances to lower a cost function that penalizes deviations from a desired response. The network must also satisfy Kirchhoff's law, balancing currents at nodes, or, equivalently, minimizing total power dissipation by adjusting node voltages. The adaptation is thus a double optimization process, in which a cost function is minimized with respect to conductances, while dissipated power is minimized with respect to node voltages. Here we study how this physical adaptation couples the cost landscape, the landscape of the cost function in the high-dimensional space of edge conductances, to the physical landscape, the dissipated power in the high-dimensional space of node voltages. We show how adaptation links the physical and cost Hessian matrices, suggesting that the physical response of networks to perturbations holds significant information about the functions to which they are adapted.
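The double optimization described in this abstract can be written out as a short set of equations. This is a sketch under stated assumptions rather than the paper's own notation: the symbols $k_{ij}$ (edge conductances), $V_i$ (node voltages), $P$, $C$, and the quadratic form of the cost are chosen here for illustration, and the factor of $\tfrac12$ in the power is a convention that does not change the minimizer.

```latex
% Physical landscape: dissipated power over node voltages, at fixed conductances
P(V; k) = \tfrac{1}{2} \sum_{(i,j)} k_{ij} \, (V_i - V_j)^2

% Inner (physical) optimization: free node voltages minimize P,
% which is equivalent to Kirchhoff's current law at every free node i
V^{*}(k) = \arg\min_{V_{\text{free}}} P(V; k)
\quad \Longleftrightarrow \quad
\sum_{j} k_{ij} \, (V^{*}_i - V^{*}_j) = 0

% Cost landscape (assumed quadratic): deviation of output voltages from targets
C(k) = \tfrac{1}{2} \sum_{o \in \text{outputs}} \bigl( V^{*}_o(k) - V^{\text{target}}_o \bigr)^2

% Outer (learning) optimization over conductances
k^{*} = \arg\min_{k} C(k)

% The two Hessians that adaptation is said to link
H^{P}_{ij} = \frac{\partial^2 P}{\partial V_i \, \partial V_j}, \qquad
H^{C}_{ab} = \frac{\partial^2 C}{\partial k_a \, \partial k_b}
```

With this form of $P$, the physical Hessian is the conductance-weighted graph Laplacian: its off-diagonal entries are $-k_{ij}$ and each diagonal entry is the sum of the conductances meeting at that node. Every change to the conductances during learning therefore reshapes the physical landscape directly.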

Microscopic Imprints of Learned Solutions in Tunable Networks

Marcelo Guzman, Felipe Martins, Menachem Stern, and Andrea J. Liu

https://journals.aps.org/prx/abstract/10.1103/f2hb-c9s1

In physical networks trained using supervised learning, physical parameters are adjusted to produce desired responses to inputs. An example is an electrical contrastive local learning network of nodes connected by edges that adjust their conductances during training. When an edge conductance changes, it upsets the current balance of every node. In response, physics adjusts the node voltages to minimize the dissipated power. Learning in these systems is therefore a coupled double-optimization process, in which the network descends both a cost landscape in the high-dimensional space of edge conductances and a physical landscape, the power dissipation, in the high-dimensional space of node voltages. Because of this coupling, the physical landscape of a trained network contains information about the learned task. Here, we derive a structure-function relation for trained tunable networks and demonstrate that all the physical information relevant to the trained input-output relation can be captured by a tuning susceptibility, an experimentally measurable quantity. We supplement our theoretical results with simulations to show that the tuning susceptibility is correlated with functional importance and that we can extract physical insight into how the system performs the task from the conductances of highly susceptible edges. Our analysis is general and can be applied directly to mechanical networks, for example networks trained for protein-inspired functions such as allostery.
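The coupled double optimization can be illustrated numerically on a toy resistor network. The sketch below is not the authors' method: the 4-node topology, the boundary voltages, the target value, and all function names are hypothetical, and it uses plain finite-difference gradient descent on the conductances rather than the contrastive local learning rule the abstract refers to. The inner step solves Kirchhoff's law (equivalently, minimizes dissipated power over free node voltages); the outer step lowers a quadratic cost on one output voltage.

```python
import numpy as np

# Hypothetical toy network: edges as (node_i, node_j); all values are assumptions.
EDGES = [(0, 1), (1, 2), (2, 3), (0, 2), (1, 3)]
N_NODES = 4
FIXED = {0: 1.0, 3: 0.0}   # input nodes held at fixed voltages
OUTPUT_NODE = 2
TARGET = 0.3               # desired output voltage

def laplacian(k):
    """Conductance-weighted graph Laplacian (Hessian of the power in V)."""
    L = np.zeros((N_NODES, N_NODES))
    for (i, j), k_e in zip(EDGES, k):
        L[i, i] += k_e
        L[j, j] += k_e
        L[i, j] -= k_e
        L[j, i] -= k_e
    return L

def solve_voltages(k):
    """Inner optimization: free voltages that satisfy Kirchhoff's current law."""
    L = laplacian(k)
    fixed = sorted(FIXED)
    free = [n for n in range(N_NODES) if n not in FIXED]
    v = np.zeros(N_NODES)
    v[fixed] = [FIXED[n] for n in fixed]
    rhs = -L[np.ix_(free, fixed)] @ v[fixed]
    v[free] = np.linalg.solve(L[np.ix_(free, free)], rhs)
    return v

def power(v, k):
    """Dissipated power (with a conventional factor of 1/2)."""
    return 0.5 * sum(k_e * (v[i] - v[j]) ** 2 for (i, j), k_e in zip(EDGES, k))

def cost(k):
    """Quadratic penalty on the deviation of the output voltage from the target."""
    return 0.5 * (solve_voltages(k)[OUTPUT_NODE] - TARGET) ** 2

def cost_gradient(k, eps=1e-6):
    """Central finite-difference gradient of the cost w.r.t. conductances."""
    grad = np.zeros_like(k)
    for e in range(len(k)):
        dk = np.zeros_like(k)
        dk[e] = eps
        grad[e] = (cost(k + dk) - cost(k - dk)) / (2 * eps)
    return grad

def train(k0, lr=1.0, steps=300, k_min=1e-3):
    """Outer optimization: gradient descent on conductances, kept positive."""
    k = np.array(k0, dtype=float)
    for _ in range(steps):
        k = np.maximum(k - lr * cost_gradient(k), k_min)
    return k

k_init = np.ones(len(EDGES))
k_trained = train(k_init)
print("initial cost:", cost(k_init))
print("trained cost:", cost(k_trained))
print("output voltage:", solve_voltages(k_trained)[OUTPUT_NODE])
```

Each call to `cost` re-solves the voltages, which mirrors the statement that physics readjusts node voltages whenever a conductance changes. A global gradient is used here only for brevity; a physical network has no access to it and relies on local rules instead.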

----

Active Inference Institute information:

Website: https://www.activeinference.institute/

Activities: https://activities.activeinference.institute/

Discord: https://discord.activeinference.institute/

Donate: http://donate.activeinference.institute/

YouTube: https://www.youtube.com/c/ActiveInference/

X: https://x.com/InferenceActive

Active Inference Livestreams: https://video.activeinference.institute/
