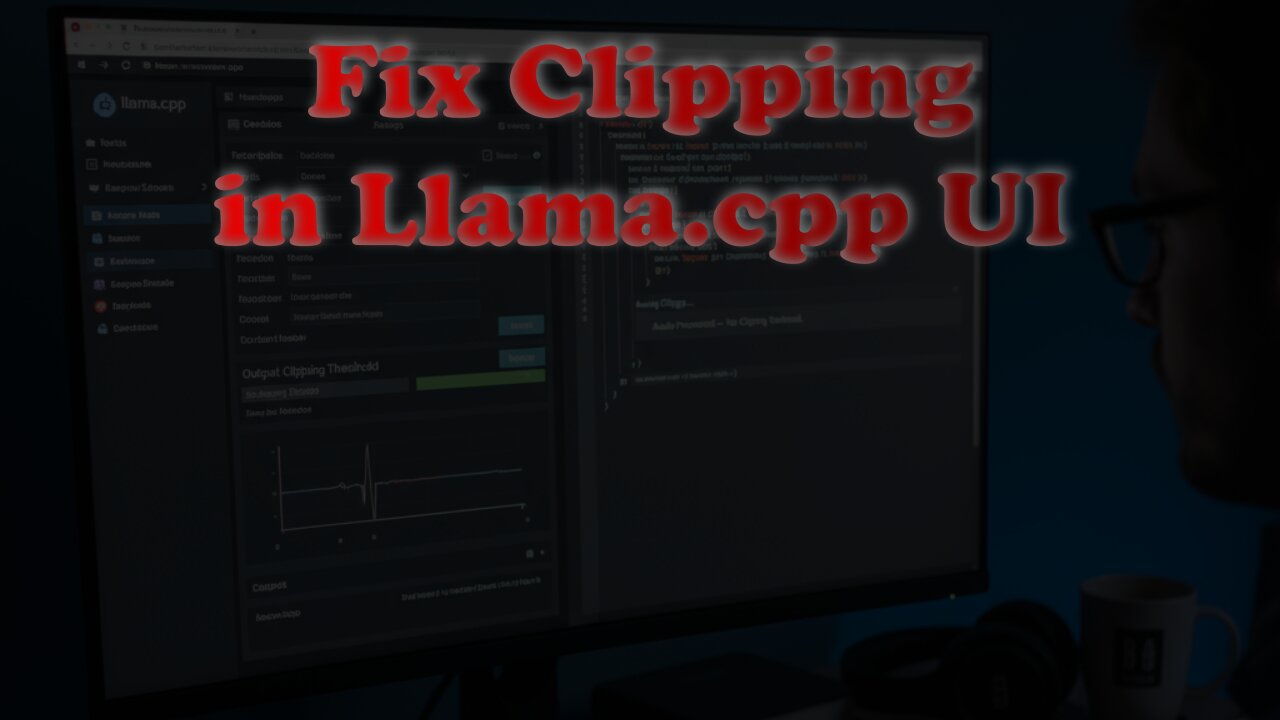

Customizing Web UI for Llama.cpp on AMD GPU: No Clipping, Better Output!

In this tutorial, we'll customize the Web UI for Llama.cpp to prevent clipping and improve the conversation flow. We use two models, llama-2-7b-chat.Q8_0.gguf and DeepSeek-R1-Distill-Qwen-32B-Q5_K_S.gguf, running on Linux with the AMD Instinct MI60 32GB HBM2 GPU. In a previous video, we set up a base Web UI using default settings. You can find the earlier tutorial here: https://www.ojambo.com/web-ui-for-ai-deepseek-r1-32b-model

This time, the focus is on tuning settings for better output quality and smoother performance when interacting with these models. We'll also explore stable-diffusion.cpp, a potential alternative to ComfyUI for your AI workflow.

Programming Resources:

Check out my programming books: https://www.amazon.com/stores/Edward-Ojambo/author/B0D94QM76N

Explore my programming courses: https://ojamboshop.com/product-category/course

Personalized Learning:

Interested in a one-on-one online programming tutorial? Learn more here: https://ojambo.com/contact

Need help with AI installation, migration, or custom solutions for chat, image, and video generation? Get in touch: https://ojamboservices.com/contact

Don't forget to Like, Subscribe, and Hit the Notification Bell so you don't miss out on future tutorials and deep dives into AI development!
