
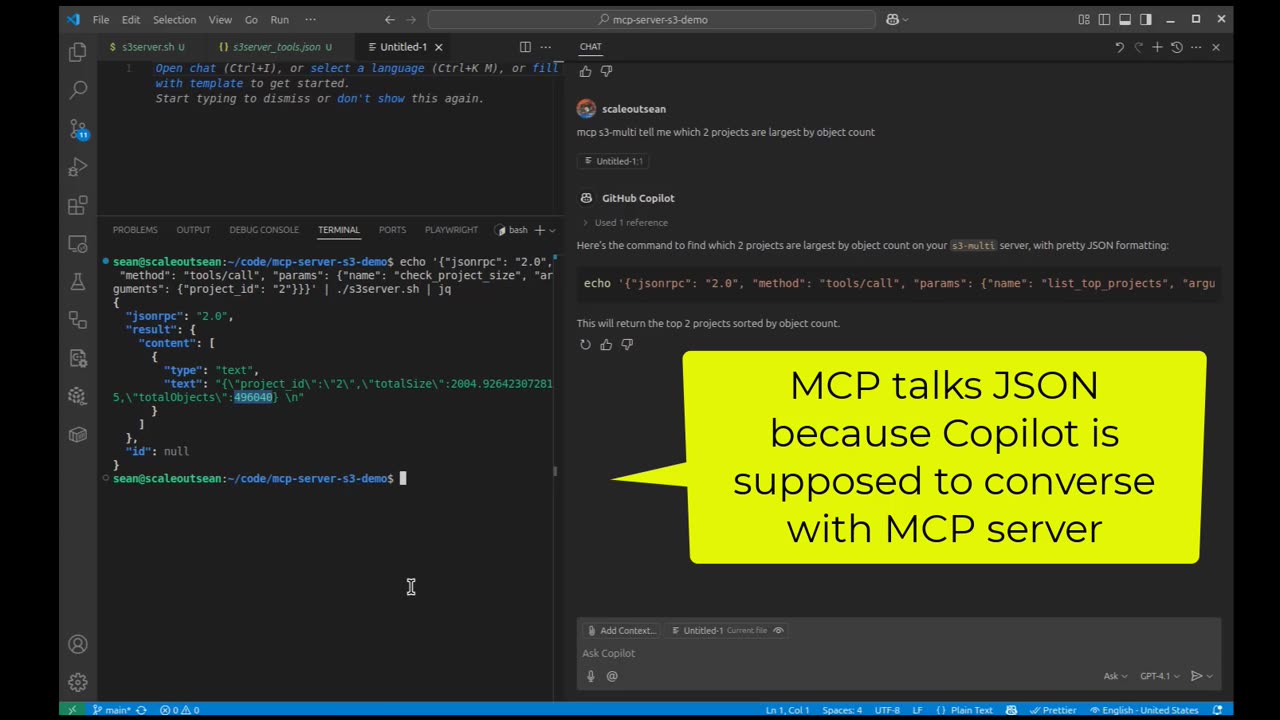

Getting extra information about S3 buckets on NetApp object storage

Correction: it's not just NetApp, but I didn't want people to come here looking for AWS S3 and get pissed off. The approach actually works with any S3-compatible storage. Basically, if the bucket isn't huge, you may be able to list all of its objects every day, or at least every week, and then either push that data into a logging platform (such as Loki) or store it in a database (e.g. InfluxDB).
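A minimal sketch of the "list everything, then summarize" step, assuming a boto3-style S3 client (anything exposing `get_paginator("list_objects_v2")`) pointed at an S3-compatible endpoint. Grouping by top-level prefix is just one illustrative choice, not necessarily what the video does, and the endpoint and bucket names in the usage comment are hypothetical.

```python
from collections import defaultdict


def list_all_objects(s3_client, bucket, prefix=""):
    """Yield every object record in a bucket, following pagination.

    Assumes a boto3 S3 client (or anything with the same
    get_paginator("list_objects_v2") interface).
    """
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # A page has no "Contents" key when nothing matched
        for obj in page.get("Contents", []):
            yield obj


def summarize(objects):
    """Aggregate object count and total bytes per top-level prefix."""
    stats = defaultdict(lambda: {"count": 0, "bytes": 0})
    for obj in objects:
        key = obj["Key"]
        # Keys without a "/" are grouped under a synthetic "(root)" label
        top = key.split("/", 1)[0] if "/" in key else "(root)"
        stats[top]["count"] += 1
        stats[top]["bytes"] += obj["Size"]
    return dict(stats)


# Usage (hypothetical endpoint and bucket):
# import boto3
# s3 = boto3.client("s3", endpoint_url="https://s3.example.internal")
# print(summarize(list_all_objects(s3, "my-bucket")))
```

Because the listing is a generator, even a large bucket is processed page by page instead of being held in memory all at once. The run time still grows with the object count, though, which is why a daily or weekly schedule makes sense.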

The first part also highlights that you can keep Loki's own data on S3 as well (on ONTAP or StorageGRID), which the demo shows.

In the second half of the video I wanted to point out two things. First, having the data in a DB lets you avoid constantly re-querying the bucket live, which is especially no bueno with buckets that hold many objects. Second, you can expose the same DB to any other app or front-end, rather than having the information only in your logs (here, Loki).
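To illustrate the "store it in a DB" side, here is a sketch that turns per-prefix stats into InfluxDB line protocol, so that each scheduled listing becomes one timestamped snapshot that other apps can query without touching the bucket. The measurement name `s3_prefix_usage` and the tag and field names are hypothetical. The input is assumed to be a dict mapping prefix to `{"count": ..., "bytes": ...}`.

```python
import time


def escape_tag(value):
    """Escape commas, equals signs and spaces, as line protocol requires for tag values."""
    return value.replace(",", "\\,").replace("=", "\\=").replace(" ", "\\ ")


def to_line_protocol(bucket, stats, ts_ns=None):
    """Render {prefix: {"count": int, "bytes": int}} as line protocol, one line per prefix."""
    if ts_ns is None:
        ts_ns = time.time_ns()  # nanosecond precision, the line protocol default
    lines = []
    for prefix, s in sorted(stats.items()):
        tags = f"bucket={escape_tag(bucket)},prefix={escape_tag(prefix)}"
        # The trailing "i" marks integer fields
        fields = f"objects={s['count']}i,bytes={s['bytes']}i"
        lines.append(f"s3_prefix_usage,{tags} {fields} {ts_ns}")
    return "\n".join(lines)
```

The resulting text can then be sent to InfluxDB, for example through the v2 HTTP write endpoint or the `influxdb-client` library's write API, both of which accept line protocol. Once the snapshots are in the database, dashboards and other front-ends read from it instead of re-listing the bucket.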

I muse about all of this in the blog post linked below, which also covers SMB and NFS shares, where the same (or a similar) approach applies. It's a bit long, or "ranty" if you will, but I was excited to find that this is actually feasible for buckets and shares far larger than I expected.

https://scaleoutsean.github.io/2025/06/05/simple-filesystem-and-s3-analytics-and-mcp.html
