
What Is the Take It Down Act?


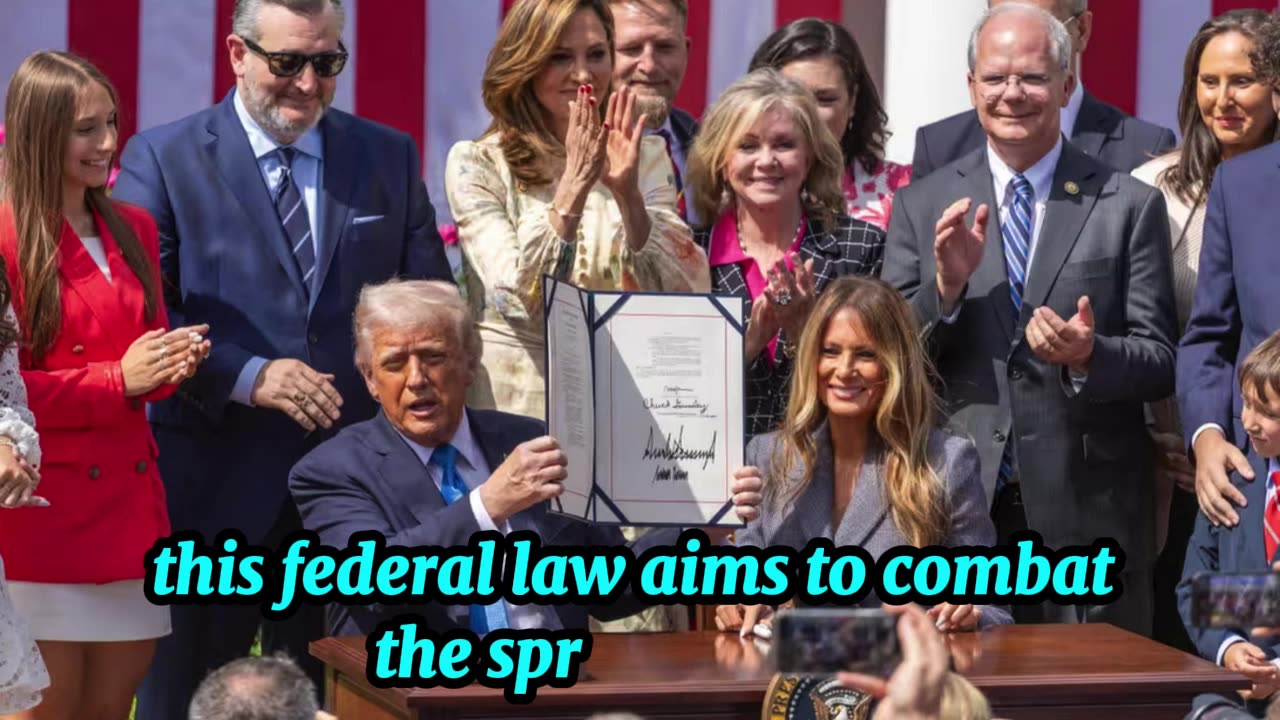

President Donald Trump signed the Take It Down Act into law on May 19, 2025. This federal law aims to combat the spread of non-consensual intimate images, including those generated by artificial intelligence (AI), commonly known as "deepfakes."

Criminalization of Non-Consensual Content: The Act makes it a federal crime to knowingly publish, or threaten to publish, intimate images without the subject's consent. This covers both real and AI-generated content.

Mandatory Removal by Platforms: Online platforms must remove such content within 48 hours of receiving a valid request from the victim. Failure to comply can result in fines of up to $50,000 per violation.

Enforcement Authority: The Federal Trade Commission (FTC) is empowered to enforce these provisions, ensuring that digital platforms adhere to the law's requirements.

The legislation received broad bipartisan support. Senators Ted Cruz (R-TX) and Amy Klobuchar (D-MN) introduced it in the Senate, and Representatives María Elvira Salazar (R-FL) and Madeleine Dean (D-PA) led the companion bill in the House. First Lady Melania Trump also advocated for the bill, hosting a roundtable to raise awareness of the issue.

The Act is particularly focused on protecting minors and women, who are disproportionately affected by the non-consensual distribution of intimate images. It provides victims with legal avenues to seek the removal of such content and hold perpetrators accountable.


If a platform does not take content down as the Take It Down Act requires, it faces serious legal and financial consequences, and victims have several options for protection. Under the Act, online platforms must remove non-consensual intimate images (real or AI-generated) within 48 hours of receiving a valid removal request from the victim. If they fail to do so:

1. Hefty Fines. Platforms can be fined up to $50,000 per violation. Each piece of content that remains up counts as a separate violation.

2. FTC Enforcement. The Federal Trade Commission (FTC) has authority to investigate and enforce compliance. Repeat or willful violations can lead to legal action, consent decrees, or even platform bans.
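As a rough illustration of the timing and fine arithmetic described above, the Python sketch below computes the 48-hour removal deadline for a request and an upper bound on fines for items still online after that deadline. It assumes the figures stated in this article ($50,000 per violation, each item counted as a separate violation); the helper names `removal_deadline` and `max_fine_exposure` are hypothetical, and this is an arithmetic sketch, not a legal calculation.

```python
from datetime import datetime, timedelta, timezone

# Figures as stated in this article (assumptions for illustration only).
REMOVAL_WINDOW = timedelta(hours=48)
MAX_FINE_PER_VIOLATION = 50_000  # USD; each item still online counts once


def removal_deadline(request_received: datetime) -> datetime:
    """Hypothetical helper: the time by which content must be taken down."""
    return request_received + REMOVAL_WINDOW


def max_fine_exposure(items_still_up: int, now: datetime, request_received: datetime) -> int:
    """Hypothetical helper: upper bound on fines, one violation per item left up."""
    if now <= removal_deadline(request_received):
        return 0  # still inside the 48-hour window, so no violation yet
    return items_still_up * MAX_FINE_PER_VIOLATION


received = datetime(2026, 6, 1, 9, 0, tzinfo=timezone.utc)
print(removal_deadline(received))  # 2026-06-03 09:00:00+00:00
late = received + timedelta(hours=50)
print(max_fine_exposure(3, late, received))  # 150000
```

In this example, three items still online two hours past the deadline give a maximum exposure of 3 × $50,000 = $150,000.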

What Victims Can Do. If a victim’s content isn’t taken down after a valid request:

1. File a Complaint with the FTC. Victims can report the platform directly to the FTC, which may initiate an investigation.

2. Pursue Civil Action. Victims can sue the platform or the perpetrator for damages, including emotional distress and reputational harm.

3. Report to Law Enforcement. If the content involves coercion, blackmail, minors, or deepfakes used for harm, criminal charges may apply (e.g., cyber exploitation, child sexual abuse laws).

Platforms That Don’t Comply. Platforms that do not act within 48 hours risk:

1. Losing public trust.

2. Regulatory scrutiny.

3. Being blacklisted or banned in certain jurisdictions.

This concludes this topic.
