Premium Only Content

Compiler From Scratch: Phase 1 - Tokenizer Generator 022: Resolving DFA state ambiguity

Streamed on 2024-12-13 (https://www.twitch.tv/thediscouragerofhesitancy)

Zero Dependencies Programming!

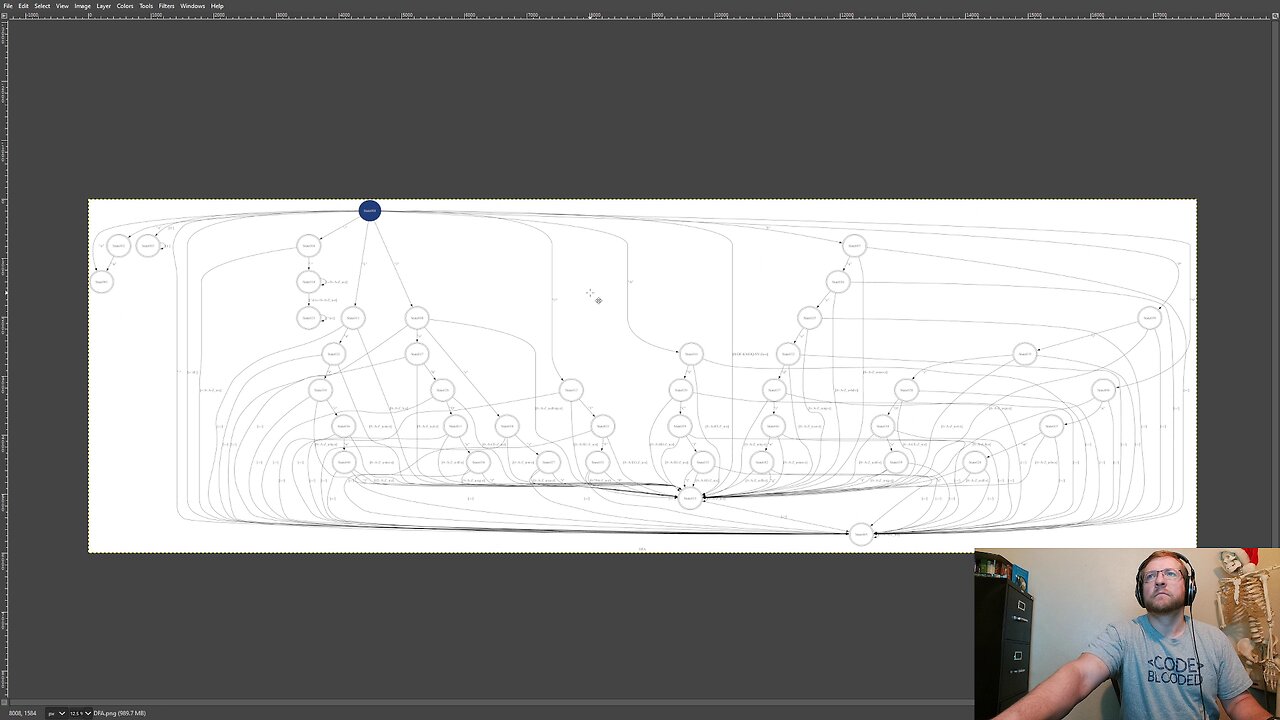

Last week we got stuck by the fact that DFAs can have overlapping transitions. In these cases the first test always wins and there is no way to jump off an invalid track onto another valid track once it starts down a track. So we fixed that today.

The trick is to think of each transition as a set of characters; a set in the mathematical sense. When processing the transitions we look for overlapping sets. If they overlap we compute:

1) Which characters are only in the first set

2) Which characters are only in the second set

3) Which characters are in both sets, and treat that as a new transition that follows the lowest rule number (rule order precedence).

Then add each of these three onto the unprocessed list for further checking. Once a transition makes it all the way through the unprocessed list without overlapping any other sets, this transition if put on the processed list. This process also has the added benefit of making the order we check the transitions in not matter at all. We can shuffle the transitions into any order, and since they don't overlap any more, they will all be checked eventually and not get cut off by an overlapping rule. Now the DFA looks much messier, but it is finally correct.

With that change done the testing of VVProject tokenizer proceeded. It didn't take much fiddling to get it the way we want. We had already done most of the plumbing into VVProject last week and with a bit of tweaking it was working just fine there as well.

I started down the road of making a tokenizer for the VVTokenizerDefinition, but got sidetracked thinking about multiple-encodings support in that tokenizer. I started down a dark road trying to make that work, but where VVProject calls VVTokenizerDefinition was where I found the problem: for the "Multi" encoding to work it would have to know the encoding when we generate the tokenizer itself. We can't switch that encoding behavior at tokenizer runtime with this system, only at tokenizer generation time. And the biggest problems is the REGEX tokenizing rule. That will have to support anything at tokenization time. I have a plan, but ran out of time by the time I had thought it through. We'll have to remove some of the work we did today, but that will have to wait for next week.

-

1:23:31

1:23:31

DeVory Darkins

3 hours agoBRUTAL moment Jeffries HUMILIATED by CNBC host regarding Obamacare

155K55 -

1:03:10

1:03:10

The Quartering

2 hours agoThere's An OnlyFans For Pedos, SNAP Bombshell & Big Annoucement

27.6K35 -

2:24:29

2:24:29

The Culture War with Tim Pool

4 hours agoMAGA Civil War, Identity Politics, Christianity, & the Woke Right DEBATE | The Culture War Podcast

195K146 -

2:20:13

2:20:13

Side Scrollers Podcast

4 hours agoVoice Actor VIRTUE SIGNAL at Award Show + Craig’s HORRIBLE Take + More | Side Scrollers

27.6K6 -

18:01

18:01

Bearing

8 hours agoThe Rise of DIGISEXUALS 🤖💦 Humanity Is Finished

5.13K21 -

LIVE

LIVE

Jeff Ahern

1 hour agoFriday Freak out with Jeff Ahern

97 watching -

1:59:21

1:59:21

The Charlie Kirk Show

3 hours agoCreeping Islamization + What Is An American? + AMA | Sedra, Hammer | 11.21.2025

60.2K24 -

1:08:27

1:08:27

Sean Unpaved

3 hours agoWill Caleb Williams & Bears WIN The NFC North? | UNPAVED

19.9K -

2:19:31

2:19:31

Lara Logan

5 hours agoSTOLEN ELECTIONS with Gary Berntsen & Ralph Pezzullo | Ep 45 | Going Rogue with Lara Logan

34.5K10 -

1:47:18

1:47:18

Steven Crowder

6 hours agoTo Execute or Not to Execute: Trump Flips the Dems Sedition Playbook Back at Them

328K323