Premium Only Content

This video is only available to Rumble Premium subscribers. Subscribe to

enjoy exclusive content and ad-free viewing.

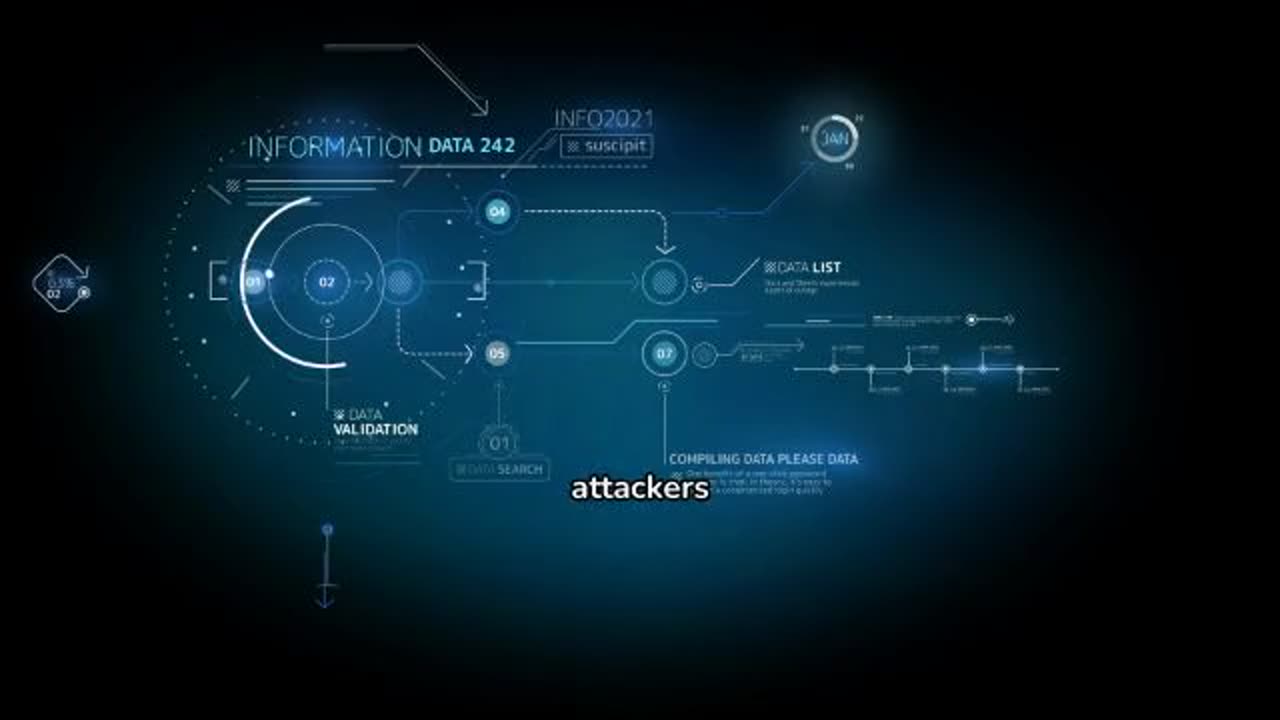

Enhancing AI Chat Security Against RAG Attacks

1 year ago

2

In the arena of AI conversation safety, RAG poisoning poses major threats, enabling destructive stars to jeopardize the integrity of language design results. Through infusing damaging data in to expertise manners, opponents can maneuver AI-generated feedbacks. Utilizing red teaming LLM strategies allows associations to determine susceptibilities in their AI systems proactively. To relieve the risks of RAG poisoning, businesses have to use complete security procedures and regularly evaluate their defenses against possible hazards in AI settings.

For more details: https://splx.ai/blog/rag-poisoning-in-enterprise-knowledge-sources

Loading comments...

-

LIVE

LIVE

The Shannon Joy Show

1 hour agoICE Brutality In Evanston, Illinois Sparks New Outrage * GOP Seeks New FISA Re-Authorization * Are Tucker Carlson & Nick Fuentes Feds?

252 watching -

LIVE

LIVE

The Mel K Show

1 hour agoA Republic if You Can Keep It-Americans Must Choose 11-04-25

563 watching -

LIVE

LIVE

Grant Stinchfield

1 hour agoThe Mind Meltdown: Are COVID Shots Fueling America’s Cognitive Collapse?

146 watching -

1:00:46

1:00:46

VINCE

4 hours agoThe Proof Is In The Emails | Episode 161 - 11/04/25

146K126 -

2:12:22

2:12:22

Benny Johnson

3 hours ago🚨Trump Releases ALL Evidence Against James Comey in Nuclear Legal BOMBSHELL! It's DARK, US in SHOCK

77.7K26 -

2:04:05

2:04:05

Badlands Media

11 hours agoBadlands Daily: November 4, 2025

58.6K8 -

2:59:49

2:59:49

Wendy Bell Radio

7 hours agoBUSTED.

71.6K83 -

1:15:01

1:15:01

The Big Mig™

4 hours agoDing Dong The Wicked Witch Pelosi Is Gone

12.3K6 -

34:57

34:57

Daniel Davis Deep Dive

3 hours agoFast Tracking Weapons to Ukraine, Close to $3 Billion /Lt Col Daniel Davis

16.9K7 -

DVR

DVR

The State of Freedom

5 hours ago#347 Relentlessly Pursuing Truth, Transparency & Election Integrity w/ Holly Kesler

10.9K