Premium Only Content

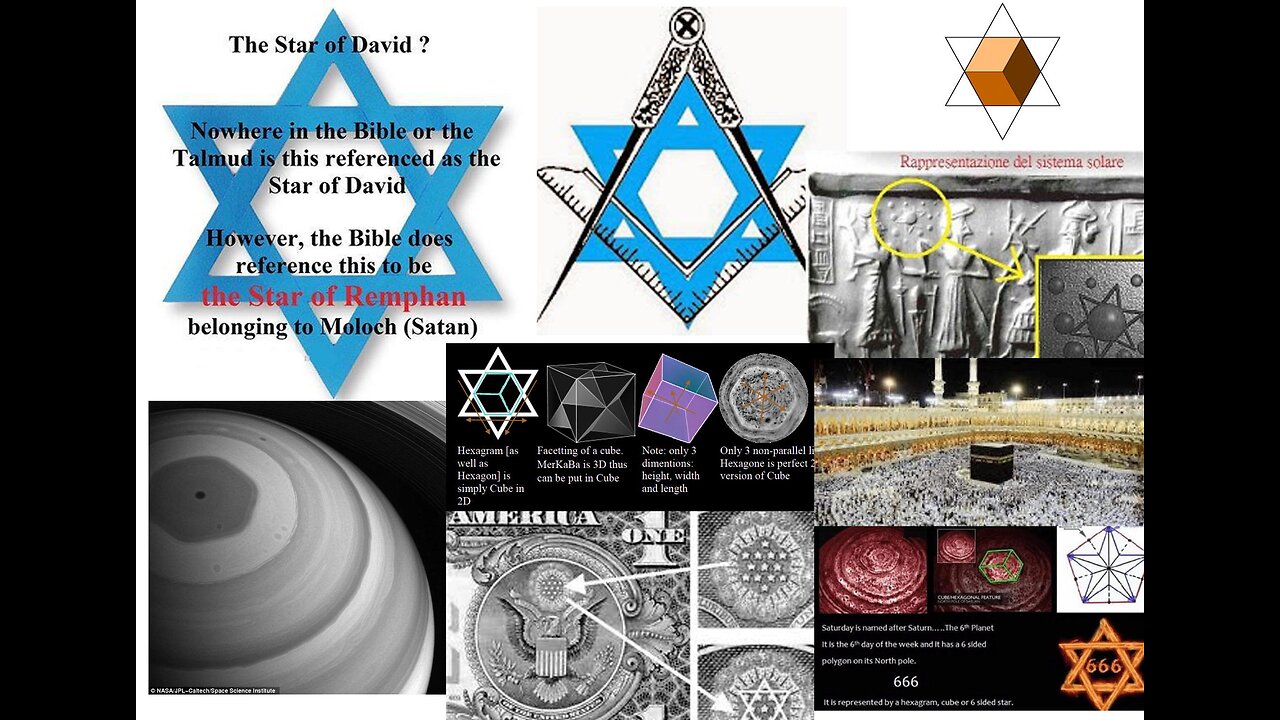

The West After the "good guys" Won WW2 - Current

We are told the "good guys" won in WW2, so what has happened to Europe and America since then and why? We have lived through a moral and racial degeneration since then in slow motion. The right blames the left and the left blames the right. BOTH are controlled by the same masters, the "victims" of WW2 and the "evil Nazis". Our history has been subverted and inverted by the same human looking rats. Time to wake up, Goy!

**FAIR USE**

Copyright Disclaimer under section 107 of the Copyright Act 1976, allowance is made for “fair use” for purposes such as criticism, comment, news reporting, teaching, scholarship, education and research.

Fair use is a use permitted by copyright statute that might otherwise be infringing.

Non-profit, educational or personal use tips the balance in favor of fair use.
