
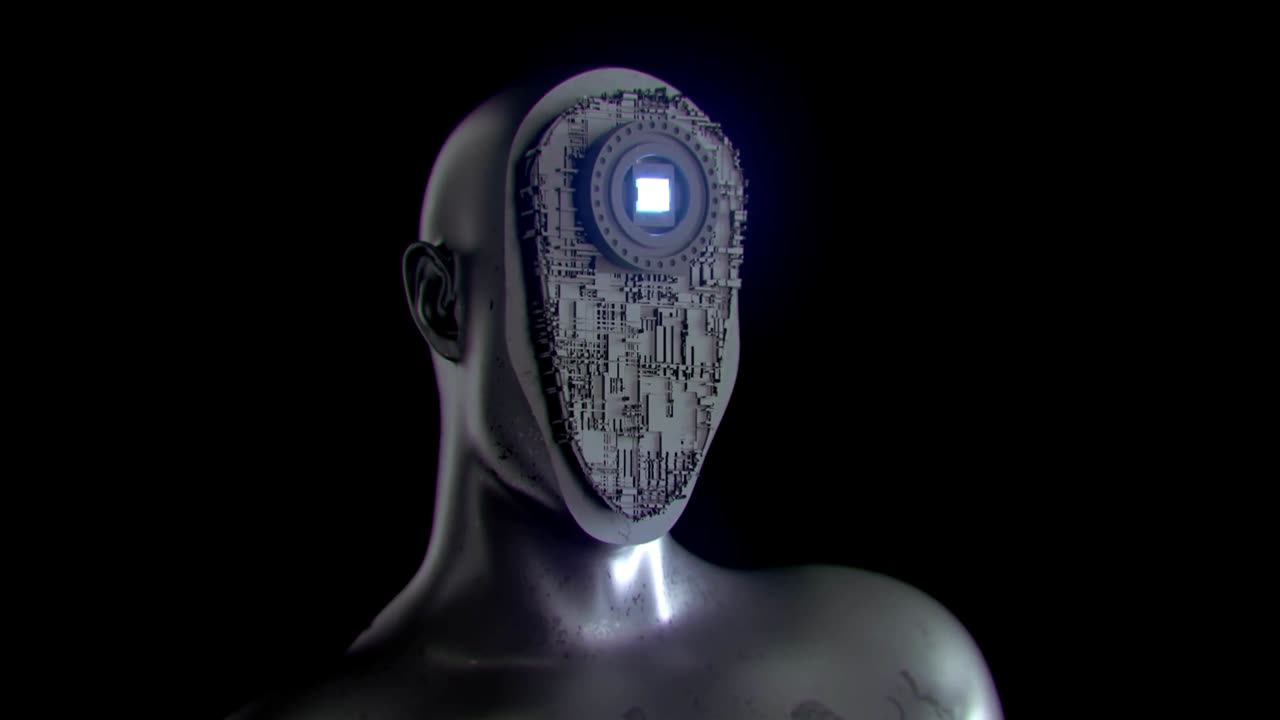

#Meta and #Nvidia recently published research on teaching #AImodels to interact with the #realworld

#Meta and #Nvidia recently published research on teaching #AImodels to interact with the #realworld by training them in a simulated one. The real world is complex and messy, and it is also slow. That makes it difficult for agents to learn to control robots and perform tasks like opening a drawer and putting something inside. To help these agents learn more effectively, @Nvidia has added another layer of #automation: a large #languagemodel helps write the reward code used in reinforcement learning. The system is called the Evolution-driven Universal REward Kit for Agent (EUREKA).

#EUREKA proved surprisingly good at its job: the reward functions it wrote were often more effective than those written by #humans. It iterates on its own code, improving as it goes, which helps it generalize to different applications. The pen trick above is only simulated, but it took far less human time and expertise to create than it would have without EUREKA. Using the same technique, #agents also performed well on a set of other virtual dexterity and locomotion tasks.

#Meta is also working on embodied #AI and building new habitats for future robot companions. It announced a new version of its "Habitat" platform, which includes nearly photorealistic, carefully annotated 3D #environments that an AI agent can navigate. The new version lets people, or agents trained on human behavior, enter the simulator alongside the robot and interact with it or with the environment at the same time. This is an important capability, because it lets the robot learn to work with or around humans and human-like agents.

@Meta also introduced HSSD-200, a new #database of 3D interiors that improves the fidelity of the #environments. Training in around a hundred of these high-fidelity scenes produced better results than #training in 10,000 lower-fidelity ones. Meta also highlighted a new robotics simulation stack, #HomeRobot, for @BostonDynamics' Spot and Hello Robot's Stretch. The hope is that standardizing basic navigation and manipulation software will let researchers in this area focus on the higher-level problems where innovation is most needed.

#Habitat and #HomeRobot are available under an MIT license on their @GitHub pages, and HSSD-200 is available under a @CreativeCommons non-commercial license.
