Premium Only Content

CGI Vdeo and audio. How it works ?

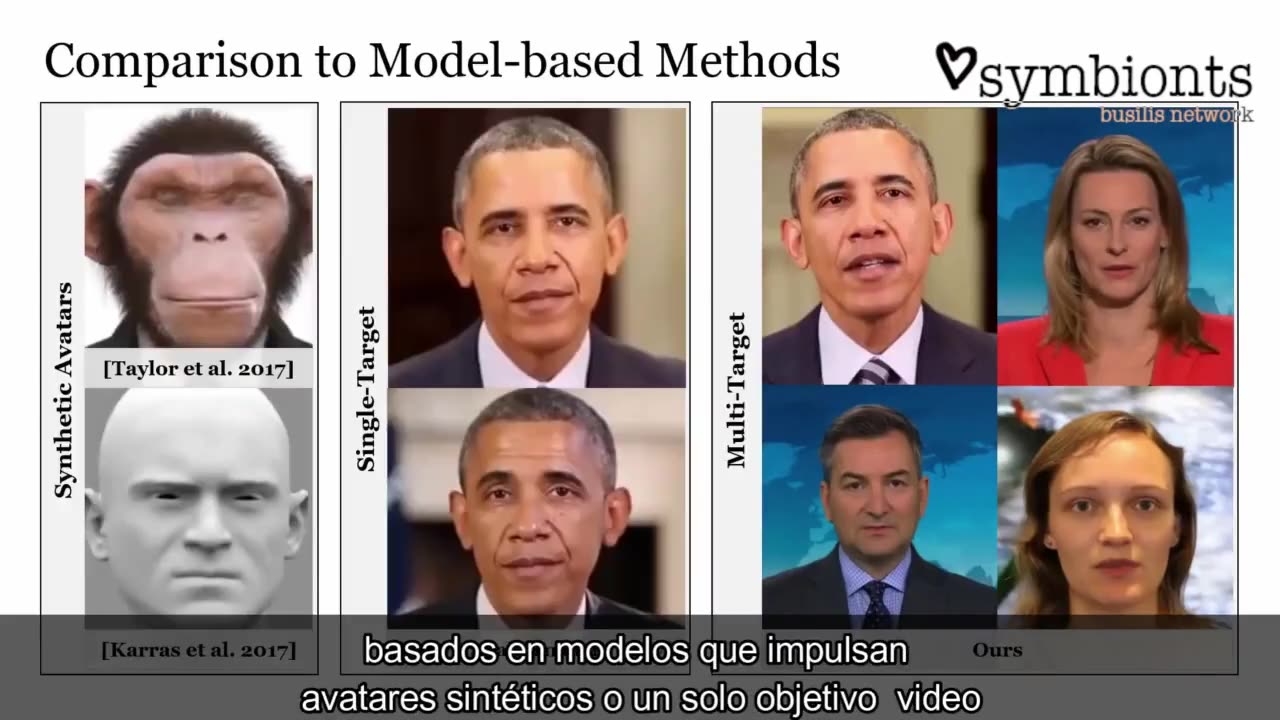

Given an audio sequence of a source person or digital assistant, we generate a photo-realistic output video of a target person that is in sync with the audio of the source input. This audio-driven facial reenactment is driven by a deep neural network that employs a latent 3D face model space. Through the underlying 3D representation, the model inherently learns temporal stability while we leverage neural rendering to generate photo-realistic output frames. This approach generalizes across different people, allowing to synthesize videos of a target actor with the voice of any unknown source actor or even synthetic voices that can be generated utilizing standard text-to-speech approaches. This has a variety of use-cases, including audio-driven video avatars, video dubbing, and text-driven video synthesis of a talking head. We demonstrate the capabilities of this method in a series of audio- and text-based puppetry examples. This method is not only more general than existing works since we are generic to the input person, but we also show superior visual and lip sync quality compared to photo-realistic audio- and video-driven reenactment techniques.

-

49:05

49:05

Game On!

20 hours ago $3.63 earnedA Champion has been CROWNED! Plus Masters 2025 Preview!

39.1K1 -

![Supreme Court (Not Barrett) Allows Trump Admin to Enforce Deportations of Gang Members [EP 4506-8AM]](https://1a-1791.com/video/fww1/1a/s8/1/1/w/Z/y/1wZyy.0kob.2-small-Supreme-Court-Not-Barrett-A.jpg) LIVE

LIVE

The Pete Santilli Show

4 days agoSupreme Court (Not Barrett) Allows Trump Admin to Enforce Deportations of Gang Members [EP 4506-8AM]

874 watching -

13:17

13:17

Clownfish TV

13 hours agoSnow White Only Made $5 MILLION...

45.4K45 -

4:50

4:50

SKAP ATTACK

16 hours ago $2.91 earnedLuka OWNS SGA

34.9K7 -

44:24

44:24

TheTapeLibrary

19 hours ago $4.92 earnedThe Strangest UFO Encounters Ever Recorded

52.7K4 -

21:36

21:36

JasminLaine

16 hours agoCarney MOCKS Danielle Smith—Clapback Leaves Him SPEECHLESS, Media Stays Silent

41.1K49 -

13:46

13:46

ThinkStory

20 hours agoTHE WHITE LOTUS Season 3 Ending Explained!

34.7K2 -

58:09

58:09

CarlCrusher

23 hours agoHunting for UFO Portals on Ancient Ley Lines

32.7K4 -

32:06

32:06

This Bahamian Gyal

17 hours agoGoing BROKE to look RICH | This Bahamian Gyal

35.5K5 -

26:47

26:47

Degenerate Plays

15 hours ago $0.96 earnedBatman Ended This Man's Whole Career - Gotham Knights : Part 46

26.3K2