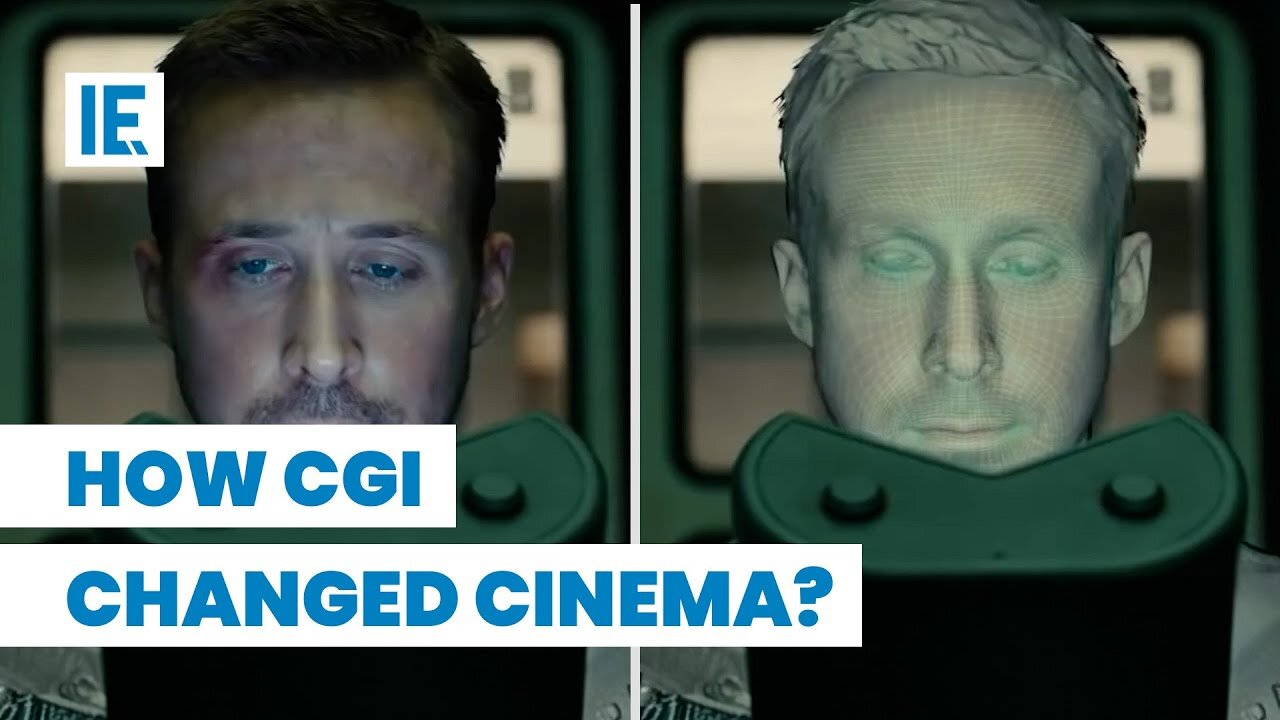

Is CGI Getting Worse?

The evolution of computer-generated imagery (CGI) has revolutionized the film industry and greatly expanded what visual storytelling can do. Contrary to a common misconception, CGI done right blends seamlessly with practical effects, blurring the boundary between the real and the computer-generated.

The use of CGI began modestly in 1958 with Alfred Hitchcock's "Vertigo", whose opening-sequence spiral effects were created with a WWII targeting computer and a pendulum. This laid the foundation for more sophisticated uses of CGI, such as 1973's "Westworld", whose creators used a technique inspired by Mariner 4's Mars imagery to depict a robot's point of view.

As technology advanced, films like 1982's "Tron" pushed the limits of visual effects, requiring values to be entered painstakingly by hand for each animated object. The film failed to garner an Oscar for its effects because of the prejudiced view that using computers was 'cheating', which highlights the industry's initial resistance to CGI.

The advent of motion-capture technology, which records actors' movements via sensors, made CGI characters more realistic in films such as "The Lord of the Rings". Today CGI complements practical effects rather than replacing them, and is often used in compositing to blend live-action and CG elements into realistic scenes, as seen in 2021's "Dune".

Despite criticism, CGI is an essential filmmaking tool that has enriched modern storytelling, enabling visual effects artists to realistically render a filmmaker's vision on screen. CGI isn't taking away from cinema's magic, but rather adding to it, crafting experiences and stories beyond the limitations of practical effects.

Join our channel by clicking here: https://rumble.com/c/c-4163125
