Warning AI And Quantum Computer Just Shut Down After It Revealed In This Video

Have you ever wondered what could happen if we bring together AI and quantum computers? Would this combination destroy our planet or give us a better understanding of the universe? AI has already become advanced, and scientists are working tirelessly to develop quantum computers, but what could happen when AI and quantum computers join forces? Recently the US government has pushed Google and NASA to stop their Quantum Computer development efforts. Why? Because they have noticed something terrifying.

Quantum computing is a technology that combines physics, mathematics, and computer science to solve complex problems. It was first proposed in the 1980s by Richard Feynman and Yuri Manin. Feynman observed in 1982 that quantum computers could efficiently simulate quantum systems, a task for which no efficient classical algorithm is known to date. The big difference between classical computers and quantum computers is the use of quantum bits (qubits) instead of the usual bits. Quantum computing is arguably the technology requiring the greatest paradigm shift on the part of developers. General interest and excitement in quantum computing was initially triggered by Peter Shor in 1994, who showed how a quantum algorithm could factor large numbers into primes exponentially faster than any known classical algorithm.

The History of Quantum Computing You Need to Know

Quantum computing is an area of computer science that explores the possibility of developing computer technologies based on the principles of quantum mechanics. It is still in its early stages but has already shown promise for significantly faster computation than is possible with classical computers. In this article, we’ll take a look at the history of quantum computing, from its earliest beginnings to the present day.

Why Bother Learning About Quantum Computing History?

History provides important context on how humanity has previously made scientific discoveries. There are many theories on precisely how scientific understanding has progressed over time but, however you view it, looking backwards does give us a fresh view on how to view the present.

Everything is theoretically impossible, until it is done. One could write a history of science in reverse by assembling the solemn pronouncements of highest authority about what could not be done and could never happen.

— Robert Heinlein, American Science Fiction Author

By placing the development of quantum computers in the wider context of the history of computing, I hope to provide the reader with a sense of the enormity of the engineering challenges that were overcome in the past and provide a moderated but positive view on the outlook for our collective ability to develop useful quantum computers. This is deliberately a rapid and non-technical article.

SHORT HISTORY OF CLASSICAL COMPUTING 2022

Analytical Engine

If you really want to go back in time you need to go back to the concept of an abacus. However, the computer in the form we broadly recognize today stems from the work of Charles Babbage (1791-1871) and Ada Lovelace (1815-1852). Babbage designed the Analytical Engine, and whilst he didn’t finish building the device, it has since been proven that it would have worked. Indeed its logical structure is broadly the same as used by modern computers. Lovelace was the first to recognize that the machine had applications beyond pure calculation, and published the first algorithm intended to be carried out by such a machine. She is consequently widely regarded as the first to recognize the full potential of computers and one of the first computer programmers.

Herman Hollerith’s punch card system was used to tabulate the 1890 census, accomplishing the task in record time and saving the government $5 million. He established the company that would ultimately become IBM.

In 1936 Alan Turing presented the idea of a universal machine, later called the Turing machine, capable of computing anything that is computable. The central concept of the modern computer was based on his ideas.

Next, we provide a high-level summary of how the core technology of computers developed after this, to give a wider contextual lens on the development of quantum computing. Quantum computing is seen by many as the next generation of computing. There are many ways of dividing up the eras of computing and the history of quantum computing, but we think this is the most instructive.

Quantum Computing Timeline

Classical computers first used bits (zeros and ones) to represent information. These bits were first represented with physical switches and relay logic in the first electro-mechanical computers. These were enormous, loud feats of engineering, and it was clear that a better way of representing bits was needed.

VACUUM TUBES (1940S – 1950S)

Vacuum tubes were used in the 1940s as a way of controlling electric current to represent bits. These were unwieldy, unreliable devices that overheated and required replacing. Construction of the first general-purpose electronic digital computer, the Electronic Numerical Integrator and Computer (ENIAC), began in 1943 and was completed in 1945. The computer had about 18,000 vacuum tubes. Computers of this generation could only perform a single task at a time, and they had no operating system.

TRANSISTORS (1950S ONWARDS)

The first transistor ever assembled, invented by a group at Bell Labs in 1947

As anyone who still owns a valve guitar amp knows, vacuum tubes are rather temperamental beasts. They use a lot of energy, they glow and they regularly blow up. In the late 1940s the search was on for a better way of representing bits, which led to the development of transistors. In 1951 the first computer for commercial use was introduced to the public: the Universal Automatic Computer (UNIVAC I). In 1953 the International Business Machines (IBM) 650 and 700 series computers were released and (relatively) widely used.

INTEGRATED CIRCUITS (1960S TO 1970S)

The original integrated circuit of Jack Kilby

The invention of integrated circuits enabled smaller and more powerful computers. An integrated circuit is a set of electronic circuits on one small flat piece of semiconductor material (typically silicon). These were first developed and improved in the late 1950s and through the 1960s. Although Kilby’s integrated circuit (pictured) was revolutionary, it was fashioned out of germanium, not silicon. About six months after Kilby’s integrated circuit was first patented, Robert Noyce, who worked at Fairchild Semiconductor, recognized the limitations of germanium and created his own chip fashioned from silicon. Fairchild’s chips went on to be used in the Apollo missions, and indeed the moon landing.

MICROPROCESSORS AND BEYOND (1970S ONWARDS)

Photos, left: Intel; right: Computer History Museum

In the 1970s the entire central processing unit started to be included on a single integrated circuit or chip, which became known as a microprocessor. Microprocessors were a big step forward in facilitating the miniaturization of computers and led to the development of personal computers, or “PCs”.

Whilst processing power has continued to advance at a rapid pace since the 1970s, much of the development of core processors has been iteration on top of the core technology developed then. Today’s computers are a story of further abstraction, including the development of software and middleware, and of miniaturization (with the development of smaller and smaller microprocessors).

Further miniaturization of components has forced engineers to consider quantum mechanical effects. As chipmakers have added more transistors onto a chip, transistors have become smaller, and the distances between different transistors have decreased. Today, electronic barriers that were once thick enough to block current are now thin enough that electrons can tunnel through them (known as quantum tunnelling). Though there are other ways to increase computing power that avoid further miniaturization, scientists have looked to see if they can harness quantum mechanical effects to create different kinds of computers.

Quantum Computing Timeline

This quantum computing timeline is constantly evolving, and we welcome edits and additions.

Quantum Mechanics as a branch of physics began with a set of scientific discoveries in the late 19th Century and has been in active development ever since. Most people will point to the 1980s as the start of physicists actively looking at computing with quantum systems.

1982: The history of quantum computing starts with Richard Feynman’s lectures on the potential advantages of computing with quantum systems.

1985: David Deutsch publishes the idea of a “universal quantum computer”

1994: Peter Shor presents an algorithm that can efficiently find the factors of large numbers, significantly outperforming the best classical algorithm and theoretically putting the underpinning of modern encryption at risk (referred to now as Shor’s algorithm).

1996: Lov Grover presents an algorithm for quantum computers that would be more efficient for searching databases (referred to now as Grover’s search algorithm)

1996: Seth Lloyd proposes a quantum algorithm which can simulate quantum-mechanical systems

1999: D-Wave Systems founded by Geordie Rose

2000: Eddie Farhi at MIT develops idea for adiabatic quantum computing

2001: IBM and Stanford University publish the first implementation of Shor’s algorithm, factoring 15 into its prime factors on a 7-qubit processor.

2011: D-Wave One, the first commercial quantum computer (an annealer), is released

2016: IBM makes quantum computing available on IBM Cloud

2019: Google claims the achievement of quantum supremacy. The term “quantum supremacy” was coined by John Preskill in 2012 to describe the point at which quantum systems can perform tasks beyond the reach of classical computers.
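The 2001 entry in the timeline mentions factoring 15 with Shor’s algorithm. As a rough illustration only, the classical skeleton of that approach can be sketched in Python: find the order r of a number a modulo n, then take a greatest common divisor. The brute-force `find_order` below stands in for the quantum step and is not how a quantum computer does it; the function names and the choice a = 7 are assumptions made for this sketch.

```python
from math import gcd

def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.
    This is the step a quantum computer would speed up."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(n, a):
    """Try to split n using the order of a modulo n.
    Returns None when this choice of a does not work."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared      # lucky guess: a already shares a factor
    r = find_order(a, n)
    if r % 2 == 1:
        return None                     # an odd order is not usable
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None                     # only trivial factors would come out
    p = gcd(half - 1, n)
    return p, n // p

factors = factor_from_order(15, 7)      # a = 7 is an arbitrary illustrative choice
print(factors)
```

For a number as small as 15 the brute-force search is instant; the difficulty the timeline refers to only appears for numbers hundreds of digits long, where no efficient classical way of finding the order is known.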

Today’s quantum computers are described by some as sitting around the vacuum tube era (or before) that we outlined in the previous section. This is because we are only just at the cusp of making useful and scalable quantum computers. This stage of development means that it’s not straightforward to decide how to invest in the technology, and we cover this in the following article.

When I was researching and writing this piece, two things struck me. Firstly, the history of computers has not been a clear, linear progression over the last 100 or so years. Rather it has relied on big leaps that were hard to envision a priori. Secondly, whilst we try to steer away from straight-out hype at TQD, we recognize that the large engineering feats of the 1940s are a far cry from the computers we have now, and that they were driven forward by individuals with the backing of smaller budgets than we benefit from today. It leaves us quietly confident that, though the development of quantum computers will be far from clear sailing, there is a future where the steampunk chandeliers (thanks Bob Sutor) we see today will be only the first steps in a fascinating story of scientific development.

Superposition Principle

The principle of superposition (also called the superposition property) states that, in any linear system, the net response caused by two or more stimuli is the sum of the responses that would have been caused by each stimulus individually. So if input A produces response X and input B produces response Y, then input (A + B) produces response (X + Y).

A function $F(x)$ that satisfies the superposition principle is called a linear function. Superposition can be defined by two simpler properties: additivity, $F(x_1 + x_2) = F(x_1) + F(x_2)$, and homogeneity, $F(ax) = aF(x)$ for a scalar $a$.

This principle has many applications in physics and engineering, since many physical systems can be modeled as linear systems. For example, a beam can be modeled as a linear system in which the input stimulus is the load on the beam and the output response is the deflection of the beam. The importance of linear systems is that they are easier to analyze mathematically. A large body of mathematical techniques applies to them, including frequency-domain linear transform methods such as the Fourier and Laplace transforms, and linear operator theory. Because physical systems are generally only approximately linear, the principle of superposition is only an approximation of the true physical behavior.

The principle of superposition applies to any linear system, including algebraic equations, linear differential equations, and systems of equations of these forms. The stimuli and responses can be numbers, functions, vectors, vector fields, time-varying signals, or any other objects that satisfy certain axioms. Note that when vectors or vector fields are involved, a superposition is interpreted as a vector sum. When superposition holds, it automatically also holds for all linear operations applied to these functions (by definition), such as gradients, derivatives, and integrals (if they exist).
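The two defining properties, additivity and homogeneity (scaling by a scalar), can be checked numerically. The following is a minimal Python sketch; the two example systems and the helper name `obeys_superposition` are invented for illustration, and passing the check at a few sample points is evidence of linearity, not a proof.

```python
import math

def linear_response(x):
    """A linear system: scales its input by a fixed gain (hypothetical example)."""
    return 3.0 * x

def nonlinear_response(x):
    """A nonlinear system for contrast: squares its input."""
    return x * x

def obeys_superposition(f, a, b, scale, tol=1e-12):
    """Check additivity f(a + b) == f(a) + f(b) and
    homogeneity f(scale * a) == scale * f(a) at the given sample points."""
    additive = math.isclose(f(a + b), f(a) + f(b), abs_tol=tol)
    homogeneous = math.isclose(f(scale * a), scale * f(a), abs_tol=tol)
    return additive and homogeneous

linear_ok = obeys_superposition(linear_response, 2.0, 5.0, 4.0)
nonlinear_ok = obeys_superposition(nonlinear_response, 2.0, 5.0, 4.0)
print(linear_ok, nonlinear_ok)
```

The squaring system fails because the response to 2 + 5 is 49, while the separate responses add up to only 4 + 25 = 29.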

Relation to Fourier analysis and similar methods

Describing a very general stimulus (in a linear system) as a superposition of stimuli of a specific, simple form often makes it easier to compute the response. For example, Fourier analysis describes the stimulus as a superposition of infinitely many sinusoids. The principle of superposition allows each of these sinusoids to be analyzed separately and its individual response to be calculated. (The response is itself a sinusoid, with the same frequency as the stimulus but generally a different amplitude and phase.) According to the principle of superposition, the response to the original stimulus is the sum (or integral) of all the individual sinusoidal responses. As another common example, in Green's function analysis the stimulus is described as a superposition of infinitely many impulse functions, and the response is then a superposition of impulse responses. Fourier analysis is especially common for waves. For example, electromagnetic theory describes ordinary light as a superposition of plane waves (waves of fixed frequency, polarization, and direction). As long as the principle of superposition holds (which is often but not always the case; see nonlinear optics), the behavior of any light wave can be understood as a superposition of the behavior of these simpler plane waves.
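The Fourier argument can be tried out on a small sampled signal. This Python sketch is only an illustration: the 16-sample stimulus, the circular 3-point moving average used as the linear system, and all function names are assumptions chosen for the example. It splits the stimulus into sinusoidal components, computes the response to each one separately, and compares the sum with the response to the whole stimulus.

```python
import cmath
import math

N = 16  # number of samples (arbitrary choice for this sketch)

def dft(signal):
    """Discrete Fourier transform: coefficients of the complex sinusoids in `signal`."""
    n = len(signal)
    return [sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def smooth(signal):
    """A linear system: circular 3-point moving average."""
    n = len(signal)
    return [(signal[t - 1] + signal[t] + signal[(t + 1) % n]) / 3 for t in range(n)]

# Stimulus: a mixture of two sinusoids.
stimulus = [math.sin(2 * math.pi * t / N) + 0.5 * math.cos(2 * math.pi * 3 * t / N)
            for t in range(N)]

# Split the stimulus into its N sinusoidal components.
coeffs = dft(stimulus)
components = [[coeffs[k] * cmath.exp(2j * math.pi * k * t / N) / N for t in range(N)]
              for k in range(N)]

# Respond to each sinusoid separately, then add the responses.
responses = [smooth(component) for component in components]
summed = [sum(response[t] for response in responses) for t in range(N)]

# Respond to the whole stimulus at once.
direct = smooth(stimulus)

max_error = max(abs(summed[t] - direct[t]) for t in range(N))
print(max_error < 1e-9)
```

The two routes agree up to floating-point rounding because the moving average is linear; a system that clipped or squared its input would not pass this comparison.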

Wave superposition

Waves are usually described by the variation of some parameter over space and time, for example the height of a water wave, the pressure in a sound wave, or the electromagnetic field in a light wave. The value of this parameter is called the amplitude of the wave, and the wave itself is a function specifying the amplitude at each point. In any system with waves, the waveform at a given time is a function of the sources (that is, any external forces that create or affect the wave) and the initial conditions of the system. In many cases (such as the classical wave equation) the equation describing the wave is linear. When this is true, the principle of superposition can be applied. This means that the net amplitude caused by two or more waves traversing the same space is the sum of the amplitudes that the individual waves would have produced separately. For example, two waves traveling towards each other will pass right through each other without any distortion on the other side.

= Wave diffraction vs. wave interference =

Regarding wave superposition, Richard Feynman writes: No one has ever been able to satisfactorily define the difference between interference and diffraction. It is just a matter of usage, and there is no specific, important physical difference between them. Roughly speaking, when there are only a few sources interfering, say two, the result is usually called interference, but when there is a large number of them, the word diffraction is more often used.

Another author elaborates: The difference is one of convenience and convention. When the waves to be superimposed originate from a few coherent sources, say two, the effect is called interference. On the other hand, when the waves to be superimposed originate by subdividing a wavefront into infinitesimal coherent wavelets (sources), the effect is called diffraction. That is, the difference between the two phenomena is only a matter of degree, and they are essentially two limiting cases of superposition effects.

Yet another source agrees: This chapter [Fraunhofer diffraction] is a continuation of chapter 8 [Interference], since the fringes observed by Young are the diffraction pattern of a double slit. On the other hand, few opticians would regard the Michelson interferometer as an example of diffraction. To some extent, Feynman's observation reflects the difficulty of distinguishing between division of amplitude and division of wavefront, since several important categories of diffraction relate to interference that accompanies division of the wavefront.

= Wave interference =

The phenomenon of interference between waves is based on this idea. If two or more waves traverse the same space, the net amplitude at each point is the sum of the individual wave amplitudes. In some cases, such as noise-cancelling headphones, the amplitude of the summed fluctuations is smaller than the component fluctuations. This is called destructive interference. In other cases, such as line arrays, the amplitude of the summed variation is larger than the individual amplitudes of the components. This is called constructive interference.
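Constructive and destructive interference can be reproduced by simply adding two sampled sine waves. This is a minimal Python sketch; the helper name `peak_of_sum`, the unit amplitudes, and the sample count are choices made for illustration.

```python
import math

def peak_of_sum(phase_shift, samples=1000):
    """Peak amplitude of sin(x) + sin(x + phase_shift), sampled over one period."""
    return max(
        abs(math.sin(2 * math.pi * i / samples)
            + math.sin(2 * math.pi * i / samples + phase_shift))
        for i in range(samples)
    )

constructive = peak_of_sum(0.0)      # waves in phase: amplitudes add
destructive = peak_of_sum(math.pi)   # waves half a cycle apart: amplitudes cancel
print(round(constructive, 6), round(destructive, 6))
```

With equal amplitudes the in-phase sum reaches twice the individual peak and the out-of-phase sum vanishes, which is the idealized case behind noise-cancelling headphones.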

= Departures from linearity =

In most realistic physical situations, the equations governing waves are only approximately linear. In such situations, the principle of superposition holds only approximately. In general, the accuracy of the approximation tends to improve as the wave amplitude decreases. See the Nonlinear Optics and Nonlinear Acoustics articles for examples of phenomena that occur when the principle of superposition is not exactly true.

= Quantum superposition =

A major task in quantum mechanics is to calculate how a certain type of wave propagates and behaves. The wave is described by a wave function, and the equation that governs its behavior is called the Schrödinger equation. The main approach to computing the behavior of a wave function is to write it as a superposition (called a "quantum superposition") of possibly infinitely many other wave functions of a particular class: stationary states, whose behavior is particularly simple. Since the Schrödinger equation is linear, the behavior of the original wave function can then be calculated using the superposition principle.

The projective nature of the quantum mechanical state space causes some confusion, because a quantum mechanical state is a ray in projective Hilbert space, not a vector. According to Dirac, "if the ket vector corresponding to a state is multiplied by any complex number, not zero, the resulting ket vector will correspond to the same state [italics in original]." However, the sum of two rays to compose a superposed ray is undefined. As a result, Dirac himself uses the ket vector representation of states to decompose or split, for example, a ket vector $|\psi_i\rangle$ into a superposition of component ket vectors $|\phi_j\rangle$ as $|\psi_i\rangle = \sum_j C_j |\phi_j\rangle$, where $C_j \in \mathbf{C}$. The equivalence class of $|\psi_i\rangle$ gives a well-defined meaning to the relative phases of the $C_j$, but an absolute phase change (the same amount for all the $C_j$) does not affect the equivalence class of $|\psi_i\rangle$.

There are exact correspondences between the superposition presented in the main part of this page and quantum superposition. For example, the Bloch sphere, which represents the pure states of a two-level quantum mechanical system (a qubit), is also known as the Poincaré sphere, which represents the different types of classical pure polarization states.

Nevertheless, on the topic of quantum superposition, Kramers writes: "The principle of [quantum] superposition ... has no analogy in classical physics." According to Dirac, "the superposition that occurs in quantum mechanics is of an essentially different nature from any occurring in the classical theory". Dirac's reasoning involves the atomicity of observation, which is valid, but as regards phase, what is actually meant is the phase translation symmetry derived from time translation symmetry, which also applies to classical states, as shown above for classical polarization states.
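The difference between an absolute and a relative phase change can be seen in a few lines of Python. This sketch makes several assumptions that are not in the text above: the state is a single qubit with two amplitudes, and a Hadamard transformation (a standard way of letting the two amplitudes interfere) is applied before the measurement probabilities are read off. The function names are invented for the example.

```python
import cmath
import math

def probabilities(amplitudes):
    """Measurement probabilities |C_j|^2 for a normalized list of amplitudes."""
    return [abs(c) ** 2 for c in amplitudes]

def hadamard(amplitudes):
    """Apply a Hadamard transformation to a single-qubit state [C_0, C_1]."""
    c0, c1 = amplitudes
    s = 1 / math.sqrt(2)
    return [s * (c0 + c1), s * (c0 - c1)]

state = [1 / math.sqrt(2), 1 / math.sqrt(2)]          # equal superposition
global_shift = [cmath.exp(0.7j) * c for c in state]   # same phase on every C_j
relative_shift = [state[0], -state[1]]                # phase flipped on one C_j only

p_original = probabilities(hadamard(state))
p_global = probabilities(hadamard(global_shift))
p_relative = probabilities(hadamard(relative_shift))
print(p_original, p_global, p_relative)
```

In this sketch the global phase leaves the outcome probabilities unchanged, while flipping the relative phase moves the outcome from one basis state to the other, in line with the statement that only the relative phases of the $C_j$ carry physical meaning.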

Boundary value problems

A common type of boundary value problem is (abstractly speaking) finding a function $y$ that satisfies some equation $F(y) = 0$ together with some boundary specification $G(y) = z$. For example, in Laplace's equation with Dirichlet boundary conditions, $F$ is the Laplacian operator in a region $R$, $G$ is the operator that restricts $y$ to the boundary of $R$, and $z$ is the function that $y$ is required to equal on the boundary of $R$. If $F$ and $G$ are both linear operators, then the principle of superposition says that a superposition of solutions of the first equation is another solution of the first equation: $F(y_1) = F(y_2) = \cdots = 0$ implies $F(y_1 + y_2 + \cdots) = 0$. The boundary values, on the other hand, superpose: $G(y_1) + G(y_2) = G(y_1 + y_2)$. Using these facts, if we can compile a list of solutions to the first equation, we can carefully superimpose these solutions so that the sum satisfies the second equation. This is one of the common ways to approach boundary value problems.

Additive state decomposition

Consider a simple linear system $\dot{x} = Ax + B(u_1 + u_2)$, $x(0) = x_0$. By the principle of superposition, the system can be decomposed into $\dot{x}_1 = Ax_1 + Bu_1$, $x_1(0) = x_0$ and $\dot{x}_2 = Ax_2 + Bu_2$, $x_2(0) = 0$, with $x = x_1 + x_2$. The superposition principle is only available for linear systems. However, additive state decomposition is applicable to both linear and nonlinear systems. Now consider a nonlinear system $\dot{x} = Ax + B(u_1 + u_2) + \phi(c^T x)$, $x(0) = x_0$, where $\phi$ is a nonlinear function. With additive state decomposition, the system can likewise be additively decomposed into two subsystems whose states satisfy $x = x_1 + x_2$. This decomposition helps simplify controller design.
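As a rough numerical check, the nonlinear system can be simulated next to one possible additive decomposition. The split used here is an assumption of this sketch and may differ from the one intended in the text: a purely linear primary system $\dot{x}_1 = Ax_1 + Bu_1$, $x_1(0) = x_0$, and a secondary system $\dot{x}_2 = Ax_2 + Bu_2 + \phi(c^T(x_1 + x_2))$, $x_2(0) = 0$. The scalar parameters, the inputs, the choice $\phi = \sin$, and the forward-Euler integration are likewise illustrative.

```python
import math

A, B, C = -1.0, 1.0, 1.0            # hypothetical scalar system parameters
X0, STEP, STEPS = 0.5, 0.001, 5000  # initial state and Euler step settings

def phi(y):
    """Hypothetical nonlinearity for this sketch."""
    return math.sin(y)

def u1(t):
    """First input: a constant."""
    return 1.0

def u2(t):
    """Second input: a slow oscillation."""
    return 0.5 * math.cos(t)

x = X0             # state of the original nonlinear system
x1, x2 = X0, 0.0   # states of the primary and secondary subsystems
for k in range(STEPS):
    t = k * STEP
    dx = A * x + B * (u1(t) + u2(t)) + phi(C * x)
    dx1 = A * x1 + B * u1(t)                       # linear part driven by u1
    dx2 = A * x2 + B * u2(t) + phi(C * (x1 + x2))  # carries u2 and the nonlinearity
    x, x1, x2 = x + STEP * dx, x1 + STEP * dx1, x2 + STEP * dx2

gap = abs(x - (x1 + x2))
print(gap < 1e-9)
```

Adding the two subsystem equations reproduces the original equation term by term, which is why the summed states track the original state up to rounding; the primary subsystem stays linear, and that is the property that is convenient for controller design.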

Other example applications

In electrical engineering, in a linear circuit the input (an applied time-varying voltage signal) is related to the output (a current or voltage anywhere in the circuit) by a linear transformation. So a superposition (that is, a sum) of input signals yields the superposition of the responses. The use of Fourier analysis on this basis is particularly common. For another related technique in circuit analysis, see the superposition theorem.

In physics, Maxwell's equations imply that the (possibly time-varying) distributions of charges and currents are related to the electric and magnetic fields by a linear transformation. The principle of superposition can therefore be used to simplify the calculation of the fields that arise from a given charge and current distribution. The principle also applies to other linear differential equations that arise in physics, such as the heat equation.

In engineering, superposition is used to solve for the deflections of beams and structures under combined loads when the effects are linear (that is, when each load does not affect the results of the other loads and the effect of each load does not significantly change the geometry of the structural system). Modal superposition methods use the natural frequencies and mode shapes to characterize the dynamic response of a linear structure.

In hydrogeology, the principle of superposition is applied to the drawdown of two or more water wells pumping in an ideal aquifer. This principle is used in the analytic element method to develop analytical elements that can be combined into a single model.

In process control, the superposition principle is used in model predictive control. The superposition principle can also be applied when small deviations from a known solution to a nonlinear system are analyzed by linearization.

In music, the theorist Joseph Schillinger used a form of the superposition principle as one of the foundations of his theory of rhythm in his Schillinger System of Musical Composition.

In computing, the superposition of multiple code paths, of code and data, or of multiple data structures can sometimes be seen in shared memory and fat binaries, as well as in overlapping instructions in highly optimized self-modifying code and executable text.

History

According to Léon Brillouin, the principle of superposition was first stated by Daniel Bernoulli in 1753: "The general motion of a vibrating system is given by a superposition of its proper vibrations." The principle was rejected by Leonhard Euler and later by Joseph Lagrange. Bernoulli argued that any sounding body could vibrate in a series of simple modes with well-defined frequencies of oscillation. As he had indicated earlier, these modes could be superimposed to produce more complex vibrations. In his reaction to Bernoulli's memoirs, Euler praised his colleague for having best developed the physical part of the problem of vibrating strings, but denied the generality and superiority of the multi-mode solution. Later, mainly through the work of Joseph Fourier, the idea became accepted.

Why Quantum Computing Is Even More Dangerous Than Artificial Intelligence

The world already failed to regulate AI. Let’s not repeat that epic mistake.

Today’s artificial intelligence is as self-aware as a paper clip. Despite the hype—such as a Google engineer’s bizarre claim that his company’s AI system had “come to life” and Tesla CEO Elon Musk’s tweet predicting that computers will have human intelligence by 2029—the technology still fails at simple everyday tasks. That includes driving vehicles, especially when confronted by unexpected circumstances that require even the tiniest shred of human intuition or thinking.

The sensationalism surrounding AI is not surprising, considering that Musk himself had warned that the technology could become humanity’s “biggest existential threat” if governments don’t regulate it. But whether or not computers ever attain human-like intelligence, the world has already summoned a different, equally destructive AI demon: Precisely because today’s AI is little more than a brute, unintelligent system for automating decisions using algorithms and other technologies that crunch superhuman amounts of data, its widespread use by governments and companies to surveil public spaces, monitor social media, create deepfakes, and unleash autonomous lethal weapons has become dangerous to humanity.

Compounding the danger is the lack of any AI regulation. Instead, unaccountable technology conglomerates, such as Google and Meta, have assumed the roles of judge and jury in all things AI. They are silencing dissenting voices, including their own engineers who warn of the dangers.

The world’s failure to rein in the demon of AI—or rather, the crude technologies masquerading as such—should serve to be a profound warning. There is an even more powerful emerging technology with the potential to wreak havoc, especially if it is combined with AI: quantum computing. We urgently need to understand this technology’s potential impact, regulate it, and prevent it from getting into the wrong hands before it is too late. The world must not repeat the mistakes it made by refusing to regulate AI.

Although still in its infancy, quantum computing operates on a very different basis from today’s semiconductor-based computers. If the various projects being pursued around the world succeed, these machines will be immensely powerful, performing tasks in seconds that would take conventional computers millions of years to conduct.

Semiconductors represent information as a series of 1s and 0s—that’s why we call it digital technology. Quantum computers, on the other hand, use a unit of computing called a qubit. A qubit can hold values of 1 and 0 simultaneously by incorporating a counterintuitive property in quantum physics called superposition. (If you find this confusing, you’re in good company—it can be hard to grasp even for experienced engineers.) Thus, two qubits could represent the sequences 1-0, 1-1, 0-1, and 0-0, all in parallel and all at the same instant. That allows a vast increase in computing power, which grows exponentially with each additional qubit.
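The counting in the paragraph above can be sketched in Python by listing the basis states that a register of qubits spreads its amplitudes over. This is a classical bookkeeping illustration only, not a quantum simulation library; the function name and the choice of an equal superposition are assumptions made for the example.

```python
import itertools
import math

def uniform_superposition(num_qubits):
    """Map each basis string (for example '10') to an equal amplitude 1/sqrt(2**n)."""
    size = 2 ** num_qubits
    amplitude = 1 / math.sqrt(size)
    return {"".join(bits): amplitude
            for bits in itertools.product("01", repeat=num_qubits)}

two_qubits = uniform_superposition(2)
labels = sorted(two_qubits)                                  # the four two-bit sequences
total_probability = sum(a ** 2 for a in two_qubits.values()) # probabilities sum to 1
sizes = [len(uniform_superposition(n)) for n in range(1, 6)] # amplitudes per register size
print(labels, total_probability, sizes)
```

The number of amplitudes doubles with every added qubit, which is the exponential growth the paragraph describes. A measurement still returns only one of the listed sequences, so useful quantum algorithms have to arrange the amplitudes so that the wanted answer is the likely one.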

If quantum computing leaves the experimental stage and makes it into everyday applications, it will find many uses and change many aspects of life. With their power to quickly crunch immense amounts of data that would overwhelm any of today’s systems, quantum computers could potentially enable better weather forecasting, financial analysis, logistics planning, space research, and drug discovery. Some actors will very likely use them for nefarious purposes, compromising bank records, private communications, and passwords on every digital computer in the world. Today’s cryptography encodes data in large combinations of numbers that are impossible to crack within a reasonable time using classic digital technology. But quantum computers, taking advantage of quantum mechanical phenomena such as superposition, entanglement, and uncertainty, may potentially be able to solve the underlying mathematical problems, such as factoring large numbers with Shor’s algorithm, fast enough to break widely used encryption in a small fraction of the time a classical attack would need.

To be clear, quantum computing is still in an embryonic stage—though where, exactly, we can only guess. Because of the technology’s immense potential power and revolutionary applications, quantum computing projects are likely part of defense and other government research already. This kind of research is shrouded in secrecy, and there are a lot of claims and speculation about milestones being reached. China, France, Russia, Germany, the Netherlands, Britain, Canada, and India are known to be pursuing projects. In the United States, contenders include IBM, Google, Intel, and Microsoft as well as various start-ups, defense contractors, and universities.

Despite the lack of publicity, there have been credible demonstrations of some basic applications, including quantum sensors able to detect and measure electromagnetic signals. One such sensor was used to precisely measure Earth’s magnetic field from the International Space Station.

In another experiment, Dutch researchers teleported quantum information across a rudimentary quantum communication network. Instead of using conventional optical fibers, the scientists used three small quantum processors to transfer quantum bits from a sender to a receiver. These experiments haven’t shown practical applications yet, but they could lay the groundwork for a future quantum internet, where quantum data can be securely transported across a network of quantum computers. Teleportation still depends on an ordinary classical signal, so it does not move information faster than the speed of light. So far, a network of this kind has only been possible in the realm of science fiction.

The Biden administration considers the risk of losing the quantum computing race imminent and dire enough that it issued two presidential directives in May: one to place the National Quantum Initiative advisory committee directly under the authority of the White House and another to direct government agencies to ensure U.S. leadership in quantum computing while mitigating the potential security risks quantum computing poses to cryptographic systems.

Experiments are also working to combine quantum computing with AI to transcend traditional computers’ limits. Today, large machine-learning models take months to train on digital computers because of the vast number of calculations that must be performed—OpenAI’s GPT-3, for example, has 175 billion parameters. When these models grow into the trillions of parameters—a requirement for today’s dumb AI to become smart—they will take even longer to train. Quantum computers could greatly accelerate this process while also using less energy and space. In March 2020, Google launched TensorFlow Quantum, one of the first quantum-AI hybrid platforms that takes the search for patterns and anomalies in huge amounts of data to the next level. Combined with quantum computing, AI could, in theory, lead to even more revolutionary outcomes than the AI sentience that critics have been warning about.

Given the potential scope and capabilities of quantum technology, it is absolutely crucial not to repeat the mistakes made with AI—where regulatory failure has given the world algorithmic bias that hypercharges human prejudices, social media that favors conspiracy theories, and attacks on the institutions of democracy fueled by AI-generated fake news and social media posts. The dangers lie in the machine’s ability to make decisions autonomously, with flaws in the computer code resulting in unanticipated, often detrimental, outcomes. In 2021, the quantum community issued a call for action to urgently address these concerns. In addition, critical public and private intellectual property on quantum-enabling technologies must be protected from theft and abuse by the United States’ adversaries.

There are national defense issues involved as well. In security technology circles, the holy grail is what’s called a cryptanalytically relevant quantum computer—a system capable of breaking much of the public-key cryptography that digital systems around the world use, which would enable blockchain cracking, for example. That’s a very dangerous capability to have in the hands of an adversarial regime.

Experts warn that China appears to have a lead in various areas of quantum technology, such as quantum networks and quantum processors. Two of the world’s most powerful quantum computers were built in China, and as far back as 2017, scientists at the University of Science and Technology of China in Hefei built the world’s first quantum communication network using advanced satellites. To be sure, these publicly disclosed projects are scientific machines to prove the concept, with relatively little bearing on the future viability of quantum computing. However, knowing that all governments are pursuing the technology simply to prevent an adversary from being first, these Chinese successes could well indicate an advantage over the United States and the rest of the West.

Beyond accelerating research, targeted controls on developers, users, and exports should therefore be implemented without delay. Patents, trade secrets, and related intellectual property rights should be tightly secured—a return to the kind of technology control that was a major element of security policy during the Cold War. The revolutionary potential of quantum computing raises the risks associated with intellectual property theft by China and other countries to a new level.

Finally, to avoid the ethical problems that went so horribly wrong with AI and machine learning, democratic nations need to institute controls that both correspond to the power of the technology as well as respect democratic values, human rights, and fundamental freedoms. Governments must urgently begin to think about regulations, standards, and responsible uses—and learn from the way countries handled or mishandled other revolutionary technologies, including AI, nanotechnology, biotechnology, semiconductors, and nuclear fission. The United States and other democratic nations must not make the same mistake they made with AI—and prepare for tomorrow’s quantum era today.

Every week scientists around the globe seem to announce another breakthrough that could help lead to the construction of a practical quantum computer. For individuals who have an interest in the subject, but not an intimate working knowledge of the field, the terms used in articles and press releases can be as confusing as the quantum subjects they address. Below are a few of the terms that I’ve run across over the past several years that keep popping up as we get closer to building that elusive quantum computer. Some terms are fairly familiar, like absolute zero, but others are not so well known, like Xmon. This list certainly isn’t exhaustive, but it’s a start to understanding some of the terms that may one day be as common as “bytes” and “chips.”

Absolute Zero — Absolute zero is the lowest temperature that is theoretically possible, at which the motion of particles that constitutes heat would be minimal. It is zero on the Kelvin scale, equivalent to –273.15°C or –459.67°F. In order to increase stability, most quantum computing systems operate at temperatures near absolute zero.

Algorithm — An algorithm is the specification of a precise set of instructions that can be mechanically applied to yield the solution to any given instance of a problem.

Anyon — An anyon is an elementary particle or particle-like excitation having properties intermediate between those of bosons and fermions. The anyon is sometimes referred to as a Majorana fermion but a more appropriate term to apply to the anyon is a particle in a Majorana bound state or in a Majorana zero mode. This name is more appropriate than Majorana fermion because the statistics of these objects is no longer fermionic. Instead, the Majorana bound states are an example of non-abelian anyons: interchanging them changes the state of the system in a way that depends only on the order in which the exchange was performed. The non-abelian statistics that Majorana bound states possess allows them to be used as a building block for a topological quantum computer.

Bose-Einstein Condensate — A Bose-Einstein condensate is a super-cold cloud of atoms that behaves like a single atom. It was predicted by Albert Einstein and Indian theorist Satyendra Nath Bose. Peter Engels, a researcher at Washington State University, explains, “This large group of atoms does not behave like a bunch of balls in a bucket. It behaves as one big super-atom. Therefore it magnifies the effects of quantum mechanics.” Theoretically, a Bose-Einstein condensate (BEC) can act as a stable qubit.

Coherence Time — Coherence time is the length of time a quantum superposition state can survive.

Doped Diamonds — A doped diamond is one into which a defect has been intentionally added. Physicists have discovered that they can make good use of these defects to manipulate the spin of quantum particles.

Entanglement — Entanglement is a physical phenomenon that occurs when pairs or groups of particles are generated or interact in ways such that the quantum state of each particle cannot be described independently. If you think that is difficult to understand, you’re not alone. Albert Einstein referred to this phenomenon as “spooky action at a distance.”

‘Fault-tolerant’ Material — Fault-tolerant materials, here meaning topological insulators, are a unique class of advanced materials that are electrically insulating on the inside but conducting on the surface. Inducing high-temperature superconductivity on the surface of a topological insulator opens the door to the creation of a prerequisite for fault-tolerant quantum computing. One of those materials is graphene. Interestingly, the edges of graphene basically turn it into a kind of topological insulator that could be used in quantum computers.

Magic State Distillation — Magic State Distillation is the “magic” behind universal quantum computation. It is a particular approach to building noise-resistant quantum computers. To overcome the detrimental effects of unwanted noise, so-called “fault-tolerant” techniques are developed and employed. Magic states are an essential (but difficult to achieve and maintain) extra ingredient that boosts the power of a quantum device to achieve the improved processing power of a quantum computer.

Micro-drum — A micro-drum is a microscopic mechanical drum. It has been reported that physicists at the National Institute of Standards and Technology (NIST) have ‘entangled’ a microscopic mechanical drum with electrical signals confirming that it could be used as a quantum memory. NIST’s achievement also marks the first-ever entanglement of a macroscopic oscillator which expands the range of practical uses of the drum.

Quantum Contextuality — Quantum contextuality is a phenomenon necessary for the “magic” behind universal quantum computation. Physicists have long known that measuring things at the quantum level establishes a state that didn’t exist before the measurement. In other words, what you measure necessarily depends on how you carried out the observation – it depends on the ‘context’ of the experiment. Scientists at the Perimeter Institute for Theoretical Physics have discovered that this context is the key to unlocking the potential power of quantum computation.

Quantum Logic Gates — In quantum computing, and specifically in the quantum circuit model of computation, a quantum gate (or quantum logic gate) is a basic quantum circuit operating on a small number of qubits. Quantum gates are the building blocks of quantum circuits, just as classical logic gates are for conventional digital circuits. Quantum logic gates are represented by unitary matrices. The most common quantum gates operate on spaces of one or two qubits, just as the common classical logic gates operate on one or two bits, so they can be described by 2 × 2 or 4 × 4 unitary matrices. In general, a gate that acts on k qubits is represented by a 2^k × 2^k unitary matrix. The number of qubits in the input and output of the gate must be equal.
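As a rough illustration of the entry above, the following Python sketch checks that two well-known gates, the Hadamard gate and the CNOT gate, are unitary and have the expected 2^k × 2^k size. The helper names (matmul, dagger, is_unitary, gate_dimension) are made up for this example and do not come from any quantum library.

```python
import math

def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def dagger(m):
    """Conjugate transpose of a square matrix."""
    n = len(m)
    return [[complex(m[j][i]).conjugate() for j in range(n)] for i in range(n)]

def is_unitary(m, tol=1e-9):
    """A matrix U is unitary when (U dagger) times U is the identity."""
    n = len(m)
    prod = matmul(dagger(m), m)
    return all(abs(prod[i][j] - (1 if i == j else 0)) < tol
               for i in range(n) for j in range(n))

def gate_dimension(k):
    """Side length of the matrix for a gate acting on k qubits."""
    return 2 ** k

S = 1 / math.sqrt(2)

# One-qubit Hadamard gate: a 2 x 2 unitary matrix.
HADAMARD = [[S, S],
            [S, -S]]

# Two-qubit CNOT gate: a 4 x 4 unitary matrix.
CNOT = [[1, 0, 0, 0],
        [0, 1, 0, 0],
        [0, 0, 0, 1],
        [0, 0, 1, 0]]

print(is_unitary(HADAMARD), len(HADAMARD) == gate_dimension(1))
print(is_unitary(CNOT), len(CNOT) == gate_dimension(2))
```

A matrix such as [[1, 1], [0, 1]] fails the same check, which is why it could not serve as a quantum gate.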

Quantum Memory State — Quantum Memory State is the state in which qubits must sustain themselves in order to be of value in quantum computing. To date, these states have proved extremely fragile because the least bit of interference at the quantum level can destroy them. For this reason, most experiments with qubits require particles to be cooled to near absolute zero as well as heavy shielding.

Quantum Register — A collection of n qubits is called a quantum register of size n.

Qubit — A quantum bit or qubit operates in the weirdly wonderful world of quantum mechanics. At that level, subatomic particles can exist in multiple states at once. This multi-state capability is called superposition. One commonly used particle is the electron, and the state normally used to generate a superposition is spin (spin up could represent a “0” and spin down could represent a “1”). Additional qubits can be added by a process called entanglement. Theoretically, each extra entangled qubit doubles the number of parallel operations that can be carried out. MIT’s Seth Lloyd notes, “We could map the whole Universe — all of the information that has existed since the Big Bang — onto 300 qubits.”
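To make the doubling concrete, here is a small Python sketch. It assumes the usual state-vector picture, in which a register of n qubits is described by 2^n complex amplitudes, and it uses an equal mix of “0” and “1” as the sample superposition. The function names are illustrative only.

```python
import math

def num_amplitudes(n_qubits):
    """Number of complex amplitudes needed to describe n qubits."""
    return 2 ** n_qubits

def measurement_probabilities(amplitudes):
    """Probability of each outcome: squared magnitude, normalized to sum to 1."""
    norm = sum(abs(a) ** 2 for a in amplitudes)
    return [abs(a) ** 2 / norm for a in amplitudes]

# One qubit in an equal superposition of "0" (spin up) and "1" (spin down).
equal_superposition = [1 / math.sqrt(2), 1 / math.sqrt(2)]
probs = measurement_probabilities(equal_superposition)
print(probs)  # each outcome has probability of about one half

# Each added qubit doubles the size of the description.
for n in (1, 2, 3, 10, 300):
    print(n, "qubits ->", len(str(num_amplitudes(n))), "decimal digits of amplitudes")
```

For 300 qubits the count is a 91-digit number, roughly 2 × 10^90, which gives a sense of the scale behind the Seth Lloyd quote.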

Spin — Spin, sometimes called “nuclear spin” or “intrinsic spin,” is the quantum form of angular momentum carried by elementary particles, composite particles (hadrons), and atomic nuclei. Having said that, the website Ask a Mathematician/Ask a Physicist states, “Spin has nothing to do with actual spinning. … Physicists use the word ‘spin’ or ‘intrinsic spin’ to distinguish the angular momentum that particles ‘just kinda have’ from the regular angular momentum of physically rotating things.”

Superposition — Superposition is an ambiguous state in which a particle can be both a “0” and a “1”.

Topological Qubit — Topological qubits rely on a rare and extraordinarily finicky quantum state; however, once formed, they theoretically would behave like sturdy knots — resistant to the disturbances that wreck the delicate properties of every other kind of qubit.

Transmon — A transmon is a superconducting loop-shaped qubit that can be created at extremely low temperatures and, at the moment, up to five of them can be linked together. A standard transmon can maintain its coherence for around 50 microseconds – long enough to be used in quantum circuits. What’s more, coherence times twice that length, and transmon arrays of 10 to 20 loops, are supposedly just around the corner.

Xmon — An Xmon is a cross-shaped qubit created by a team at the University of California, Santa Barbara. The team found that by placing five Xmons in a single row (with each qubit talking to its nearest neighbor) they were able to create a stable and effective quantum arrangement that provides the most stability and fewest errors. Like most other qubits, the Xmon must be created at temperatures approaching absolute zero.

Glossary AI And Quantum Computer Words

Abstraction—A different model (a representation or way of thinking) about a computer system design that allows the user to focus on the critical aspects of the system components to be designed.

Adiabatic quantum computer—An idealized analog universal quantum computer that operates at 0 K (absolute zero). It is known to have the same computational power as a gate-based quantum computer.

Algorithm—A specific approach, often described in mathematical terms, used by a computer to solve a certain problem or carry out a certain task.

Analog computer—A computer whose operation is based on analog signals and that does not use Boolean logic operations and does not reject noise.

Analog quantum computer—A quantum computer that carries out a computation without breaking the operations down to a small set of primitive operations (gates) on qubits; there is currently no model of full fault tolerance for such machines.

Analog signal—A signal whose value varies smoothly within a range of real or complex numbers.

Asymmetric cryptography (also public key cryptography)—A category of cryptography where the system uses public keys that are widely known and private keys that are secret to the owner; such systems are commonly used for key exchange protocols in the encryption of most of today’s electronic communications.

Basis—Any set of linearly independent vectors that span their vector space. The wave function of a qubit or system of qubits is commonly written as a linear combination of basis functions or states. For a single qubit, the most common basis is {| 0⟩, | 1⟩}, corresponding to the states of a classical bit.

Binary representation—A series of binary digits where each digit has only two possible values, 0 or 1, used to encode data and upon which machine-level computations are performed.

Certificate authority—An entity that issues a digital certificate to certify the ownership of a public key used in online transactions.

Cipher—An approach to concealing the meaning of information by encoding it.

Ciphertext—The encrypted form of a message, which appears scrambled or nonsensical.

Classical attack—An attempt by a classical computer to break or subvert encryption.

Classical computer—A computer—for example, one of the many deployed commercially today—whose processing of information is not based upon quantum information theory.

Coding theory—The science of designing encoding schemes for specific applications—for example, to enable two parties to communicate over a noisy channel.

Coherence—The quality of a quantum system that enables quantum phenomena such as interference, superposition, and entanglement. Mathematically speaking, a quantum system is coherent when the complex coefficients of the contributing quantum states are clearly defined in relation to each other, and the system can be expressed in terms of a single wave function.

Collapse—The phenomenon that occurs upon measurement of a quantum system where the system reverts to a single observable state, resulting in the loss of contributions from all other states to the system’s wave function.

Collision—In hashing, the circumstance where two different inputs are mapped to the same output, or hash value.

Complexity class—A category that is used to define and group computational tasks according to their complexity.

Computational complexity—The difficulty of carrying out a specific computational task, typically expressed as a mathematical expression that reflects how the number of steps required to complete the task varies with the size of the input to the problem.

Compute depth—The number of sequential operations required to carry out a given task.

Concatenation—The combination of two sequences in order. In the context of quantum error correction (QEC), this refers to carrying out two or more QEC protocols sequentially.

Control and measurement plane—An abstraction used to describe components of a quantum computer, which refers to the elements required to carry out operations on qubits and to measure their states.

Control processor plane—An abstraction used to describe components of a quantum computer, which includes the classical processor responsible for determining what signals and measurements are required to implement a quantum program.

Cryostat—A device that regulates the temperature of a physical system at very low temperatures, generally in an experimental laboratory.

Cryptanalysis—The use of a computer to defeat encryption.

Cryptography—The study and practice of encoding information in order to obfuscate its content, relying upon the difficulty of solving certain mathematical problems.

Cryptosystem—A method of deploying a specific cryptographic algorithm to protect data and communications from being read by an unintended recipient.

Decoherence—A process where a quantum system will ultimately exchange some energy and information with the broader environment over time, which cannot be recovered once lost. This process is one source of error in qubit systems. Mathematically speaking, decoherence occurs when the relationship between the coefficients of a quantum system’s contributing states becomes ill-defined.

Decryption algorithm—A set of instructions for returning an encrypted message to its unencrypted form. Such an algorithm takes as input a cipher text and its encryption key, and returns a cleartext, or readable, version of the message.

Digital gate—A transistor circuit that performs a binary operation using a number of binary single bit inputs to create a single-bit binary output.

Digital quantum computer—A quantum system where the computation is done by using a small set of primitive operations, or gates, on qubits.

Digital signature—An important cryptographic mechanism used to verify data integrity.

Dilution refrigerator—A specialized cooling device capable of maintaining an apparatus at temperatures near absolute zero.

Discrete-log problem on elliptic curves—A specific algebraic problem used as the basis of a specific cryptographic protocol where, given the output, it is computationally hard to compute the inputs.

Distance—In an error-correcting code, the number of bit errors that would be required to convert one valid state of a computer to another. When the number of errors is no more than (D–1)/2, one can still extract the error-free state.
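The (D–1)/2 bound can be tried out with a toy example. The sketch below uses a classical repetition code, chosen here purely for illustration (it is not a quantum code and is not taken from the glossary): a bit is copied n times, so the distance is n, and majority voting recovers the bit as long as at most (n–1)/2 copies, rounded down, are flipped. All function names are hypothetical.

```python
def correctable_errors(distance):
    """Largest number of bit errors a code of this distance can always correct."""
    return (distance - 1) // 2

def encode(bit, n):
    """Repetition code: repeat the bit n times (the code distance is n)."""
    return [bit] * n

def flip(word, positions):
    """Return a copy of the word with the bits at the given positions flipped."""
    return [b ^ 1 if i in positions else b for i, b in enumerate(word)]

def decode(word):
    """Majority vote over the received bits."""
    return 1 if sum(word) > len(word) / 2 else 0

codeword = encode(0, 5)                    # distance 5
print(correctable_errors(5))               # 2
print(decode(flip(codeword, {0, 3})))      # 0: two errors are corrected
print(decode(flip(codeword, {0, 1, 2})))   # 1: three errors exceed the bound
```

With three flips out of five, the majority vote lands on the wrong value, which is the failure the distance bound predicts.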

Encryption—The application of cryptography to protect information, currently widely used in computer systems and Internet communications.

Encryption algorithm—A set of instructions for converting understandable data to an incomprehensible cipher, or ciphertext. In practice, the algorithm takes as input the message to be encrypted along with an encryption key and scrambles the message according to a mathematical procedure.

Entanglement—The property where two or more quantum objects in a system are correlated, or intrinsically linked, such that measurement of one changes the possible measurement outcomes for another, regardless of how far apart the two objects are.

Error-corrected quantum computer—An instance of a quantum computer that emulates an ideal, fault-tolerant quantum computer by running a quantum error correction algorithm.

Fault tolerant—Resilient against errors.

Fidelity—The quality of a hardware operation, sometimes quantified in terms of the probability that a particular operation will be carried out correctly.

Fundamental noise—Noise resulting from energy fluctuations arising spontaneously within any object that is above absolute zero in temperature.

Gate—A computational operation that takes in and puts out one or more bits (in the case of a classical computer) or qubits (in the case of a quantum computer).

Gate synthesis—Construction of a gate out of a series of simpler gates.

Hamiltonian—A mathematical representation of the energy environment of a physical system. In the mathematics of quantum mechanics, a Hamiltonian takes the form of a linear algebraic operator. Sometimes, the term is used to denote the physical environment itself, rather than its mathematical representation.

Host processor—An abstraction used to describe the components of a quantum computing system, referring to the classical computer components driving the part of the system that is user controlled.

Key exchange—A step in cryptographic algorithms and protocols where keys are shared among intended recipients to enable their use in encrypting and decrypting information.

Logical qubit—An abstraction that describes a collection of physical qubits implementing quantum error correction in order to carry out a fault-tolerant qubit operation.

Logic gate—In classical computing, a collection of transistors that input and output digital signals, and that can be represented and modeled using Boolean logic (rules that combine signals that can be either false, 0, or true, 1).

Lossless—No energy is dissipated.

Measurement—Observation of a quantum system, which yields only a single classical output and collapses the system’s wave function onto the corresponding state.

Microprocessor—An integrated circuit that contains the elements of a central processing unit on a single chip.

Noise—Unwanted variations in a physical system that can lead to error and unwanted results.

Noise immunity—The ability to remove noise (unwanted variations) in a signal to minimize error.

Noisy intermediate-scale quantum (NISQ) computer—A quantum computer that is not error-corrected, but is stable enough to effectively carry out a computation before the system loses coherence. A NISQ can be digital or analog.

Nondeterministic polynomial time (NP)—A specific computational complexity class.

One-way functions—Functions that are easy to compute in one direction while being for all intents and purposes impossible to compute in the other direction.

Overhead—The amount of work (for example, number of operations) or quantity of resources (for example, number of qubits or bits) required to carry out a computational task; “cost” is sometimes used synonymously.

Post-quantum cryptography—The set of methods for cryptography that are expected to be resistant to cryptanalysis by a quantum computer.

Primitive—A fundamental computational operation.

Program—An abstraction that refers to the sequence of instructions and rules that a computer must perform in order to complete one or more tasks (or solve one or more problems) using a specific approach, or algorithm.

Quantum annealer—An analog quantum computer that operates through coherent manipulation of qubits by changing the analog values of the system’s Hamiltonian, rather than by using quantum gates. In particular, a quantum annealer performs computations by preparing a set of qubits in some initial state and changing their energy environment until it defines the parameters of a given problem, such that the final state of the qubits corresponds, with a high probability, to the answer of the problem. In general, a quantum annealer is not necessarily universal—there are some problems that it cannot solve.

Quantum communication—The transport or exchange of information as encoded into a quantum system.

Quantum computation—The use of quantum mechanical phenomena such as interference, superposition, and entanglement to perform computations that are roughly analogous to (although operate quite differently from) those performed on a classical computer.

Quantum computer—The general term for a device (whether theoretical or practically realized) that carries out quantum computation. A quantum computer may be analog or gate-based, universal or not, and noisy or fault tolerant.

Quantum cryptography—A subfield of quantum communication where quantum properties are used to design communication systems that may not be eavesdropped upon by an observer.

Quantum information science—The study of how information is or can be encoded in a quantum system, including the associated statistics, limitations, and unique affordances of quantum mechanics.

Quantum interference—When states contributing to coherent superpositions combine constructively or destructively, like waves, with coefficients adding or subtracting.
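A short Python sketch can show the coefficients adding and subtracting. It assumes a single qubit written as a pair of amplitudes for “0” and “1”, and uses the Hadamard gate as the example operation; neither choice comes from the glossary itself.

```python
import math

S = 1 / math.sqrt(2)

def hadamard(state):
    """Apply the Hadamard gate to a one-qubit state (amp0, amp1)."""
    a, b = state
    return (S * (a + b), S * (a - b))

start = (1.0, 0.0)       # the qubit starts in state "0"
once = hadamard(start)   # equal superposition: both amplitudes about 0.707
twice = hadamard(once)   # the two paths to "1" cancel, the paths to "0" add up

print(once)
print(twice)
```

After the second application, the amplitude for “1” is zero (destructive interference) and the amplitude for “0” is back to about 1 (constructive interference).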

Quantum sensing and metrology—The study and development of quantum systems whose extreme sensitivity to environmental disturbances can be exploited in order to measure important physical properties with more precision than is possible with classical technologies.

Quantum system—A collection of (typically very small) physical objects whose behavior cannot be adequately approximated by equations of classical physics.

Qubit—A quantum bit, the fundamental hardware component of a quantum computer, embodied by a quantum object. Analogous to a classical bit (or binary digit), a qubit can represent a state corresponding to either zero or one; unlike a classical bit, a qubit can also exist in a superposition of both states at once, with any possible relative contribution of each. In a quantum computer, qubits are generally entangled, meaning that any qubit’s state is inextricably linked to the state of the other qubits, and thus cannot be defined independently.

Run time—The amount of time required to carry out a computational task. In practice, the actual time required for a task depends heavily on the design of a device and of its particular physical embodiment, so run time may be described in terms of the number of computational steps.

Scalable, fault-tolerant, universal gate-based quantum computer—A system that operates through gate-based operations on qubits, analogous to circuit-based classical computers, and uses quantum error correction to correct any system noise (including errors introduced by imperfect control signals, or unintended coupling of qubits to each other or to the environment) that occurs during the time frame of the calculation.

SHA256—A specific hash function that outputs a 256-bit hash value regardless of the input size.
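The fixed output size is easy to check with Python’s standard hashlib module. The wrapper name sha256_hex is just a label for this example.

```python
import hashlib

def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 hash of the input as a hexadecimal string."""
    return hashlib.sha256(data).hexdigest()

short_hash = sha256_hex(b"")
long_hash = sha256_hex(b"a" * 10_000)

# 64 hexadecimal characters = 256 bits, whatever the input length.
print(len(short_hash) * 4, short_hash)
print(len(long_hash) * 4, long_hash)
```

Both inputs, one empty and one 10,000 bytes long, produce a 256-bit value.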

Shor’s algorithm—A quantum algorithm developed by Peter Shor in the 1990s that, if implemented on a real quantum computer of sufficient scale, would be capable of breaking the encryption used to protect Internet communications and data.

Signal—An electromagnetic field used to convey information in an electronic circuit.

Software tool—A computer program that helps a user design and compose a new computer program.

Standard cell library—A set of predesigned and tested logic gates.

Superposition—A quantum phenomenon where a system is in more than one state at a time. Mathematically speaking, the wave function of a quantum system in a superposition state is expressed as the sum of the contributing states, each weighted by a complex coefficient.

Surface code—A quantum error correction code (QECC) that is less sensitive to noise than other established QECCs, but has higher overheads.

Symmetric encryption—A type of encryption where a secret key, shared by both the sender and the receiver, is used to encrypt and decrypt communications.

Systematic noise—Noise resulting from signal interactions that is always present under certain conditions and could in principle be modeled and corrected.

Transport Layer Security (TLS) handshake—The most common key exchange protocol, used to protect Internet traffic.

Unitary operation—An algebraic operation on a vector that preserves the vector length.

Universal computer—A computer that can perform any computation that could be performed by a Turing machine.

Wave function—A mathematical description of the state of a quantum system, so named to reflect its wave-like characteristics.

Wave-particle duality—The phenomenon where a quantum object is sometimes best described in terms of wave-like properties and sometimes in terms of particle-like properties.

-

40:55

40:55

What If Everything You Were Taught Was A Lie?

4 days agoVideo Clip Every American Must See Islam, New York & Zoran Mamdani The Take Over Of America

1.52K3 -

11:32:19

11:32:19

Dr Disrespect

15 hours ago🔴LIVE - DR DISRESPECT - ARC RAIDERS - STELLA MONTIS QUESTS

242K17 -

5:20:41

5:20:41

SpartakusLIVE

9 hours agoSolos on WZ to Start then ARC?! || Friends: UNBANNED

44.2K1 -

12:58

12:58

Cash Jordan

9 hours agoMexican MOB OVERTHROWS Capital... as "Socialist President" FLOODS AMERICA with CARTELS

32.7K14 -

23:13

23:13

Jasmin Laine

10 hours agoPBO Breaks His Silence—“This Is Soviet Stuff”… and the Panel EXPLODES

25.5K19 -

1:17:26

1:17:26

Jamie Kennedy

23 hours agoCatching Up With Deep Roy: JKX Stories, Star Wars Secrets, and Total Chaos | Ep 231 HTBITY

19.3K3 -

1:28:42

1:28:42

ThisIsDeLaCruz

5 hours ago $3.00 earnedThe Secrets Behind Madonna’s Legendary Live Sound

22.1K7 -

1:22:15

1:22:15

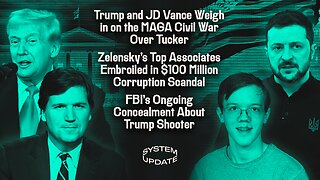

Glenn Greenwald

9 hours agoTrump and JD Vance Weigh in on the MAGA Civil War Over Tucker; Zelensky's Top Associates Embroiled in $100 Million Corruption Scandal; FBI's Ongoing Concealment About Trump Shooter | SYSTEM UPDATE #548

126K110 -

2:34:51

2:34:51

megimu32

5 hours agoON THE SUBJECT: 2000s Pop Punk & Emo Nostalgia — Why It Still Hits

22.6K6 -

3:44:13

3:44:13

VapinGamers

6 hours ago $1.68 earnedBattlefield RedSec - Getting Carried Maybe? I Need the Wins! - !rumbot !music

15.2K3