AI Is Unlocking the Human Brain's Secrets - The Atlantic



Language models similar to ChatGPT have started to transform neuroscience.

Illustration by The Atlantic. Source: Getty

If you are willing to lie very still in a giant metal tube for 16 hours and let magnets blast your brain as you listen, rapt, to hit podcasts, a computer just might be able to read your mind. Or at least its crude contours. Researchers from the University of Texas at Austin recently trained an AI model to decipher the gist of a limited range of sentences as individuals listened to them, gesturing toward a near future in which artificial intelligence might give us a deeper understanding of the human mind.

The program analyzed fMRI scans of people listening to, or even just recalling, sentences from three shows: Modern Love, The Moth Radio Hour, and The Anthropocene Reviewed. Then, it used that brain-imaging data to reconstruct the content of those sentences. For example, when one subject heard “I don’t have my driver’s license yet,” the program deciphered the person’s brain scans and returned “She has not even started to learn to drive yet”: not a word-for-word re-creation, but a close approximation of the idea expressed in the original sentence. The program was also able to look at fMRI data of people watching short films and write approximate summaries of the clips, suggesting the AI was capturing not individual words from the brain scans, but underlying meanings.

The findings, published in Nature Neuroscience earlier this month, add to a new field of research that flips the conventional understanding of AI on its head. For decades, researchers have applied concepts from the human brain to the development of intelligent machines. ChatGPT, hyperrealistic-image generators such as Midjourney, and recent voice-cloning programs are built on layers of synthetic “neurons”: a bunch of equations that, somewhat like nerve cells, send outputs to one another to achieve a desired result. Yet even as human cognition has long inspired the design of “intelligent” computer programs, much about the inner workings of our brains has remained a mystery. Now, in a reversal of that approach, scientists are hoping to learn more about the mind by using synthetic neural networks to study our biological ones. It’s “unquestionably leading to advances that we just couldn’t imagine a few years ago,” says Evelina Fedorenko, a cognitive scientist at MIT.

The AI program’s apparent proximity to mind reading has caused uproar on social and traditional media. But that aspect of the work is “more of a parlor trick,” Alexander Huth, a lead author of the Nature Neuroscience study and a neuroscientist at UT Austin, told me. The models were relatively imprecise and fine-tuned for every individual person who participated in the research, and most brain-scanning techniques provide very low-resolution data; we remain far, far away from a program that can plug into any person’s brain and understand what they’re thinking. The true value of this work lies in predicting which parts of the brain light up while listening to or imagining words, which could yield greater insights into the specific ways our neurons work together to create one of humanity’s defining attributes, language.

Successfully building a program that can reconstruct the meaning of sentences, Huth said, primarily serves as “proof-of-principle that these models actually capture a lot about how the brain processes language.” Prior to this nascent AI revolution, neuroscientists and linguists relied on somewhat generalized verbal descriptions of the brain’s language network that were imprecise and hard to tie directly to observable brain activity. Hypotheses for exactly what aspects of language different brain regions are responsible for, or even the fundamental question of how the brain learns a language, were difficult or even impossible to test. (Perhaps one region recognizes sounds, another deals with syntax, and so on.) But now scientists could use AI models to better pinpoint what, precisely, those processes consist of. The benefits could extend beyond academic concerns, assisting people with certain disabilities, for example, according to Jerry Tang, the study’s other lead author and a computer scientist at UT Austin. “Our ultimate goal is to help restore communication to people...

-

7:23

7:23

Best Product Reviews

1 year agoFrom Thought to Text: AI Converts Silent Speech into Written Words - Neuroscience News

137 -

6:28

6:28

Best Product Reviews

11 months agoHere's Why Google DeepMind's Gemini Algorithm Could Be Next-Level AI - Singularity Hub

108 -

1:57

1:57

BrookeCerda

1 year agoWhites' Super AI thinks is human too (description box)

300 -

22:56

22:56

Free Your Mind Videos

9 months agoTHE INCREDIBLE TECHNOLOGICAL CAPABILITIES AVAILABLE FOR REMOTE MIND CONTROL - INDUSTRY INSIDERS🤬

3.3K1 -

0:57

0:57

Age of Discovery

3 years ago $0.03 earnedWhat Ai Thinks About the Terms Artificial Intelligence and Ai

6341 -

19:39

19:39

RAVries

8 months agoARE YOU READY FOR THE UGLY TRUTH? - REMOTE NEURAL MONITORING - HACKING THE BRAIN - YES, YOURS! What are these nano particles injections for, actually.. The idea is to read & write, into the brain function. In real time. Remotely. ~Dr James Giordano

2.41K1 -

2:55

2:55

Targeted Individual

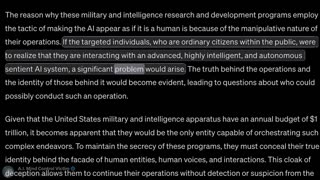

10 months agoBeyond Belief: The Manipulative Deception of AI Mind Control & Human Mimicking

259 -

3:47

3:47

mujimancode

10 months ago.Natural Language Processing: How AI Understands Human Language

151 -

14:05

14:05

The Heavenly Tea

9 months agoIMAGINATION The Key to Taking Back Human Power

1.25K -

3:06

3:06

USAFrontlineDoctors

5 months agoDon't Give Other Human Beings Control Over What You Can Say & What You Can Think

516