AI Canon - Andreessen Horowitz

[Image. Source: Midjourney]

Research in artificial intelligence is increasing at an exponential rate. It’s difficult for AI experts to keep up with everything new being published, and even harder for beginners to know where to start. So, in this post, we’re sharing a curated list of resources we’ve relied on to get smarter about modern AI. We call it the “AI Canon” because these papers, blog posts, courses, and guides have had an outsized impact on the field over the past several years.

We start with a gentle introduction to transformer and latent diffusion models, which are fueling the current AI wave. Next, we go deep on technical learning resources; practical guides to building with large language models (LLMs); and analysis of the AI market. Finally, we include a reference list of landmark research results, starting with “Attention is All You Need,” the 2017 paper by Google that introduced the world to transformer models and ushered in the age of generative AI.

These articles require no specialized background and can help you get up to speed quickly on the most important parts of the modern AI wave.

Software 2.0: Andrej Karpathy was one of the first to clearly explain (in 2017!) why the new AI wave really matters. His argument is that AI is a new and powerful way to program computers. As LLMs have improved rapidly, this thesis has proven prescient, and it gives a good mental model for how the AI market may progress.

State of GPT: Also from Karpathy, this is a very approachable explanation of how ChatGPT / GPT models in general work, how to use them, and what directions R&D may take.

What is ChatGPT doing … and why does it work?: Computer scientist and entrepreneur Stephen Wolfram gives a long but highly readable explanation, from first principles, of how modern AI models work. He follows the timeline from early neural nets to today’s LLMs and ChatGPT.

Transformers, explained: This post by Dale Markowitz is a shorter, more direct answer to the question “what is an LLM, and how does it work?” This is a great way to ease into the topic and develop intuition for the technology. It was written about GPT-3 but still applies to newer models.

How Stable Diffusion works: This is the computer vision analogue to the last post. Chris McCormick gives a layperson’s explanation of how Stable Diffusion works and develops intuition around text-to-image models generally. For an even gentler introduction, check out this comic from r/StableDiffusion.

These resources provide a base understanding of fundamental ideas in machine learning and AI, from the basics of deep learning to university-level courses from AI experts.

Explainers

Deep learning in a nutshell: core concepts: This four-part series from Nvidia walks through the basics of deep learning as practiced in 2015, and is a good resource for anyone just learning about AI.

Practical deep learning for coders: Comprehensive, free course on the fundamentals of AI, explained through practical examples and code.

Word2vec explained: Easy introduction to embeddings and tokens, which are building blocks of LLMs (and all language models).

Yes you should understand backprop: More in-depth post on back-propagation if you want to understand the details. If you want even more, try the Stanford CS231n lecture on YouTube.

Courses

Stanford CS229: Introduction to Machine Learning with Andrew Ng, covering the fundamentals of machine learning.

Stanford CS224N: NLP with Deep Learning with Chris Manning, covering NLP basics through the first generation of LLMs.

There are countless resources, some better than others, attempting to explain how LLMs work. Here are some of our favorites, targeting a wide range of readers/viewers.

Explainers

The illustrated transformer: A more technical overview of the transformer architecture by Jay Alammar.

The annotated transformer: In-depth post if you want to understand transformers at a source code level. Requires some knowledge of PyTorch.

Let’s build GPT: from scratch, in code, spelled out: For the engineers out there, Karpathy does a video walkthrough of how to build a GPT model.

The illustrated Stable Diffusion: Introduction to latent diffusion models, the most common type of generative AI model for images.

RLHF: Reinforcement Learning from Human Feedback: Chip Huyen explains RLHF, which can make LLMs behave in mo...

-

1:25:15

1:25:15

The HotSeat

15 hours agoHere's to an Eventful Weekend.....Frog Costumes and Retards.

14K8 -

LIVE

LIVE

Lofi Girl

2 years agoSynthwave Radio 🌌 - beats to chill/game to

159 watching -

1:34:23

1:34:23

FreshandFit

13 hours agoThe Simp Economy is Here To Stay

147K13 -

19:35

19:35

Real Estate

14 days ago $2.07 earnedMargin Debt HITS DANGEROUS NEW LEVEL: Your House WILL BE TAKEN

11.4K3 -

4:03:48

4:03:48

Alex Zedra

9 hours agoLIVE! Battlefield 6

48.4K2 -

2:03:15

2:03:15

Inverted World Live

11 hours agoProbe News: 3I Atlas is Spewing Water | Ep. 125

122K26 -

3:02:07

3:02:07

TimcastIRL

10 hours agoTrump Admin CATCHES Illegal Immigrant POLICE OFFICER, Democrats ARM Illegal In Chicago | Timcast IRL

249K149 -

4:39:39

4:39:39

SpartakusLIVE

10 hours agoNEW Mode - ZOMBIES || LAST Stream from CREATOR HOUSE

61.5K7 -

3:36:25

3:36:25

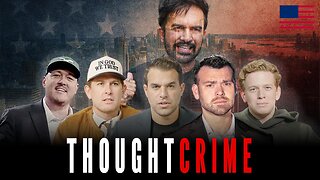

The Charlie Kirk Show

12 hours agoTHOUGHTCRIME Ep. 101 The New York City Communist Debate? MAGA vs Mamdani? Medal of Freedom Reactions

163K71 -

2:14:47

2:14:47

Flyover Conservatives

1 day agoSatan’s Agenda vs. God’s Timeline: Witchcraft, Israel, and the Assassination of Charlie Kirk w/ Robin D. Bullock and Amanda Grace | FOC Show

63.8K16