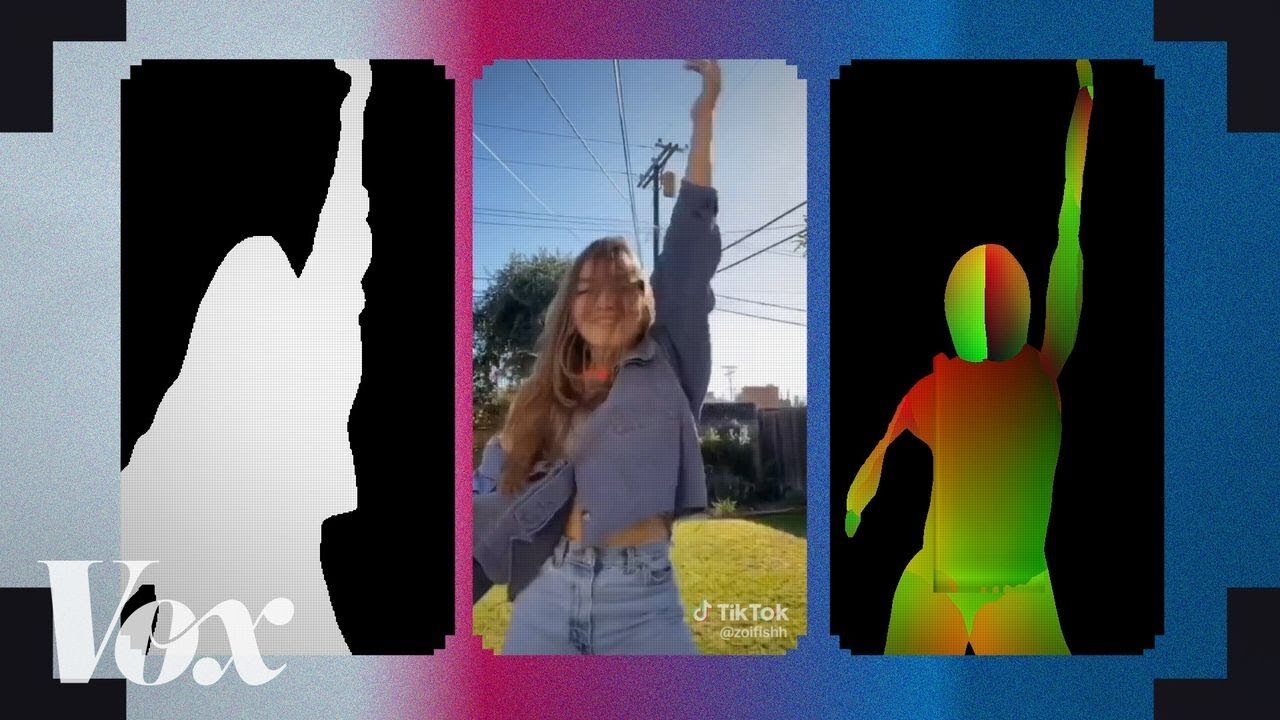

How TikTok dances trained an AI to see

And remember the Mannequin Challenge? Yep, they used that too.

Follow my channel for more informative videos

The quest for computer vision requires lots of data, including real-world images. But those images can be hard to find, which has led researchers to look in some pretty creative places.

The above video shows how researchers used TikTok dances and the Mannequin Challenge to train AI. The quest is for “ground truth”: real-world examples that can be used to train a model or to grade its guesses. TikTok datasets provide this by showing a wide range of movement, clothing types, backgrounds, and people. That diversity is key to training a model that can handle the randomness of the real world.

The same thing happens with the Mannequin Challenge: all those people pretending to stand still gave researchers, and their models, more real-world data to train with than they ever could have hoped for.

Watch the above video to learn more.

Further Reading:

Here are the original project pages for the research featured in the video:

TikTok-aided depth: https://www.yasamin.page/hdnet_tiktok

Mannequin Challenge: https://google.github.io/mannequincha...

Geofill and Reference-Based Inpainting: https://paperswithcode.com/paper/geof...

Virtual Correspondence: https://virtual-correspondence.github...

DensePose: http://densepose.org/

Make sure you never miss behind-the-scenes content in the Vox Video newsletter. Sign up here: http://vox.com/video-newsletter
