
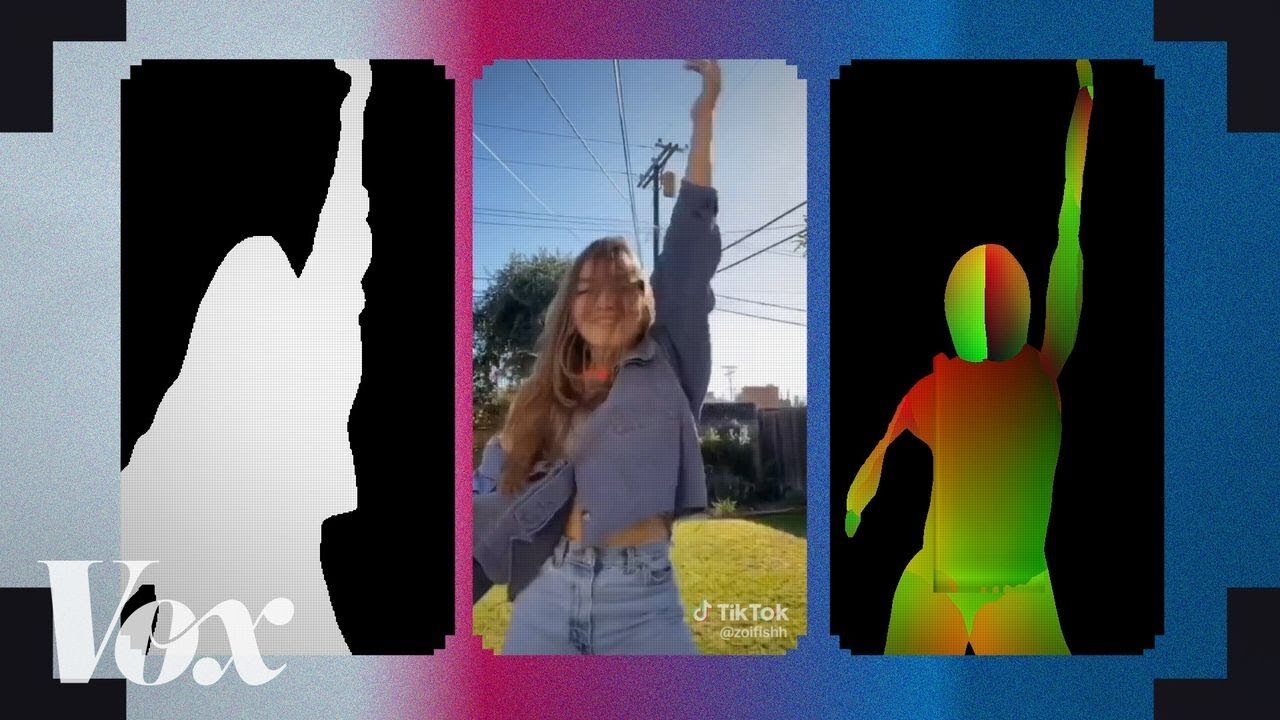

How TikTok dances trained an AI to see

And remember the Mannequin Challenge? Yep, they used that too.

The quest for computer vision requires lots of data, including real-world images. But that data can be hard to find, which has led researchers to look in some pretty creative places.

The above video shows how researchers used TikTok dances and the Mannequin Challenge to train AI. The quest is for “ground truth”: real-world examples that can be used to train an AI or grade it on its guesses. TikTok datasets provide this by showing lots of movement, clothing types, backgrounds, and people. That diversity is key to training a model that can handle the randomness of the real world.

The same thing happens with the Mannequin Challenge. All those people pretending to stand still gave researchers, and their models, more real-world data to train with than they ever could have hoped for.
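To make "grading an AI on its guesses" a little more concrete, here is a minimal Python sketch of the general idea. It is only an illustration under stated assumptions: the function name, the depth values, and the choice of mean absolute error as the score are all hypothetical and are not taken from any of the projects in the video.

```python
# Hypothetical illustration: these names and numbers are invented for this
# example and do not come from the research projects mentioned in the video.

def mean_absolute_error(predicted, ground_truth):
    """Average absolute gap between a model's guesses and the known answers."""
    if len(predicted) != len(ground_truth):
        raise ValueError("predicted and ground_truth must have the same length")
    if not predicted:
        raise ValueError("need at least one value to grade")
    total = sum(abs(p - g) for p, g in zip(predicted, ground_truth))
    return total / len(predicted)


# Made-up depth values (in meters) for four pixels of a single video frame.
ground_truth_depth = [2.0, 2.5, 4.0, 1.0]  # the known "right answers"
model_guess = [2.5, 2.5, 3.0, 1.5]         # what a model might predict

error = mean_absolute_error(model_guess, ground_truth_depth)
print(f"Average error: {error:.2f} m")
```

The lower the score, the closer the guesses are to the ground truth. During training, a score like this is what a model would be adjusted to reduce.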

Watch the above video to learn more.

Further Reading:

Here are the original project pages for the research featured in the video:

TikTok-aided depth: https://www.yasamin.page/hdnet_tiktok

Mannequin Challenge: https://google.github.io/mannequincha...

Geofill and Reference-Based Inpainting: https://paperswithcode.com/paper/geof...

Virtual Correspondence: https://virtual-correspondence.github...

Densepose: http://densepose.org/

Make sure you never miss behind-the-scenes content in the Vox Video newsletter. Sign up here: http://vox.com/video-newsletter
